Writing Job Scripts

Overview

Version

Picotte uses Slurm version 20.02.7

In short: everything depends, and everything is complex

Performance of codes depends on the code, the hardware, the specific computations being done with the code, etc. There are very few general statements that can be made about getting the best performance out of any single computation. Two different computations using the same program (say, NAMD) may have very different requirements for best performance. You will have to become familiar with the requirements of your workflow; this will take some experimentation.

Important

Do not try to use some other job control system within job scripts, e.g. a for loop repeatedly executing a program, or a standalone process which monitors for "idle" nodes and then launches tasks remotely. To perform its function properly, Slurm expects each job to be "discrete". If your workflow involves running multiple jobs, either with or without interdependency between the jobs, please see below, and the documentation for job arrays.[1]

Everyone has different workflows and attendant requirements. It is not possible to make general examples and recommendations which will work in every case.

Disclaimer: this article gives fairly basic information about job scripts. The full documentation should be consulted for precise details. On the login nodes, the command "man sbatch" will give full information. See sbatch documentation.[2]

Documentation

- Official Slurm quick start guide: https://slurm.schedmd.com/archive/slurm-20.02.7/quickstart.html

- CAUTION: errors have been found in the official documentation; if something behaves in an unexpected way, do some experimentation to figure out what is going on. In particular:

  - there is no --ntasks-per-gpu option

  - the --cpus-per-gpu option does not properly allocate the number of CPUs requested: it only gives 1 no matter what value is specified

File Format

If you created or edited your job script on a Windows machine, you will first need to convert it to Linux format before it can run. This is due to the difference in the handling of end-of-line characters.[3] Convert a Windows-generated file to Linux format using the dos2unix command:

[juser@picotte001 ~]$ dos2unix myjob.sh

dos2unix: converting file myjob.sh to UNIX format ...
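To check whether a script still has Windows line endings before submitting it, one option is to search it for carriage-return characters. The following is a minimal sketch, not an official tool: the function name has_crlf and the throwaway demo file are made up for illustration.

```shell
#!/bin/bash
# Succeed (exit status 0) if the given file contains at least one carriage
# return, i.e. it probably has Windows (CRLF) line endings.
has_crlf() {
    grep -q $'\r' "$1"
}

# Demonstration on a throwaway file (a stand-in for myjob.sh)
demo=$(mktemp)
printf '#!/bin/bash\r\necho hello\r\n' > "$demo"

if has_crlf "$demo"; then
    echo "CRLF line endings found: run dos2unix on the file before submitting"
else
    echo "line endings look fine"
fi
```

On your own script, the check would be called as: has_crlf myjob.sh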

Glossary

See the Slurm Glossary for the meanings of various terms specified in the resource requests.

Basics

A (batch) job script is a shell script[4] with some special comment lines that can be interpreted by the scheduler, Slurm. Comment lines which are interpreted by the scheduler begin with #SBATCH, starting at the first character of a line. Here is a simple one:

#!/bin/bash

#

### select the partition "def"

#SBATCH --partition=def

### set email address for sending job status

#SBATCH --mail-user=user@host

### account - essentially your research group

#SBATCH --account=fixmePrj

### select number of nodes

#SBATCH --nodes=16

### select number of tasks per node

#SBATCH --ntasks-per-node=48

### request 15 min of wall clock time

#SBATCH --time=00:15:00

### memory size required per node

#SBATCH --mem=6GB

### You may need this if there are settings in your .bashrc

### e.g. setup for Anaconda

. ~/.bashrc

### Whatever modules you used (e.g. picotte-openmpi/gcc)

### must be loaded to run your code.

### Add them below this line.

# module load FIXME

echo "hello, world"

All #SBATCH options/parameters have abbreviated versions, e.g. "--account" can be abbreviated to "-A". See the man page or online documentation[5] for details.

Shebang

Shebang (from hash-bang, #!) defines the shell under which the job script is to be interpreted. We recommend using bash. This must be the very first line of the file, starting with the very first character.

#!/bin/bash

Partition

A "partition" in Slurm is equivalent to a "queue" in Grid Engine (on Proteus). To specify what partition your job runs under, use the option --partition/-p.

On the command line, this would look like:

[juser@picotte001 ~]$ sbatch --partition=partition my_job.sh

In a job script, you can specify the partition similarly:

#SBATCH --partition=partition

Account/Project

An "account" in Slurm is equivalent to a "project" in Grid Engine (Proteus). The "account" is an abstract grouping used in the share tree scheduler to determine job scheduling priority based on historical use. See: Job Scheduling Algorithm

#SBATCH --account=myresearchPrj

Single Multithreaded Program

A single multithreaded program which will use multiple CPU cores should request a single node, a single task, and multiple CPUs per task. E.g. a multithreaded Stata job to run on 8 cores:

#SBATCH --nodes=1

#SBATCH --ntasks=1

#SBATCH --cpus-per-task=8
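Requesting the cores does not by itself tell the program how many threads to start. A minimal sketch, assuming an OpenMP-style program that reads OMP_NUM_THREADS (other programs may use their own option or setting, so check the program's documentation):

```shell
#!/bin/bash
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=8

# SLURM_CPUS_PER_TASK is only set when --cpus-per-task is requested,
# so fall back to a single thread otherwise.
export OMP_NUM_THREADS="${SLURM_CPUS_PER_TASK:-1}"

echo "Using ${OMP_NUM_THREADS} thread(s)"
```

Deriving the thread count from SLURM_CPUS_PER_TASK keeps the request and the program in step if the request is changed later.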

MPI Parallel Applications

MPI launches multiple copies of the same program which communicate and coordinate with each other. Each instance of the program, called a rank in MPI jargon, is a task in Slurm jargon. Here is an example where a 192-task MPI program is run over 4 nodes:

#SBATCH --nodes=4

#SBATCH --ntasks-per-node=48

NOTE this sense of task is different from that used in job arrays, where each "sub-job" in the job array is also called a task.

For hybrid MPI-OpenMP programs, these values will have to be modified based on the number of threads each MPI rank (task) will run. The option --cpus-per-task will need to be specified. Here, a "cpu" is a CPU core. For optimal performance, each thread of execution should get its own CPU core.

For example, for a hybrid job that runs:

- 3 nodes

- 12 ranks per node

- 4 threads per rank

#SBATCH --nodes=3

#SBATCH --ntasks-per-node=12

#SBATCH --cpus-per-task=4

NOTE do not use these values for your own job. You should benchmark (i.e. try various combinations of tasks and cpu-cores) to figure out optimal setting for your specific application.
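The arithmetic behind the hybrid request above can be checked with a few lines of shell. This is only a worked illustration of the example values (3 nodes, 12 ranks per node, 4 threads per rank); the variable names are made up here.

```shell
#!/bin/bash
nodes=3
ranks_per_node=12
threads_per_rank=4

# Each thread should get its own core, so cores = ranks x threads
cores_per_node=$(( ranks_per_node * threads_per_rank ))
total_ranks=$(( nodes * ranks_per_node ))
total_cores=$(( nodes * cores_per_node ))

echo "cores per node: ${cores_per_node}"   # 48
echo "MPI ranks:      ${total_ranks}"      # 36
echo "total cores:    ${total_cores}"      # 144
```

The per-node figure of 48 cores is the same as the 48 tasks per node used in the pure MPI example, so the request fills each node without oversubscribing it.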

Slurm offers more control over placement of tasks, e.g. to take advantage of NUMA. Please see the sbatch documentation,[6] and search for the "--mem-bind" option and "NUMA". Binding is done so that threads of execution remain on a specific CPU core rather than having to migrate between different CPU cores.

The number of ranks per node and CPUs (cores) per rank need to match the physical architecture of the CPUs. All CPUs on Picotte, except for the big memory nodes, are Intel Xeon Platinum 8268. See Picotte Hardware and Software for more detail.

GPU Applications

GPU-enabled nodes are available in the gpu partition. You will need to request the number of GPU devices needed, up to 4 per node:

#SBATCH --partition=gpu

#SBATCH --gpus-per-node=4

Like NUMA in MPI applications, process placement for GPU applications can be controlled such that the application thread which communicates with a GPU device runs on the CPU core which is "closest" to the device. See the sbatch[7] documentation and search for the "--gpu-bind" option.

To enforce proper CPU affinity for performance reasons:

#SBATCH --gres-flags=enforce-binding

Memory

You can request a certain amount of memory per node with:

#SBATCH --mem=1200G

Or a certain amount of memory per CPU (core):

#SBATCH --mem-per-cpu=4G

For more detailed information on memory in Linux, and implications for Slurm, see the Berlin Institute of Health HPC Docs -- Slurm -- Memory.

Specific Number of Nodes

In order to specify the number of nodes, use the option "--nodes".

#SBATCH --nodes=16

See Picotte Hardware and Software for details of compute nodes.

Hardware Limitations

It is easy to request an amount of resources that renders a job impossible to run. Please see the article on Picotte Hardware and Software for what actual resources are available.

In addition, on each compute node, the system itself takes up some memory, typically about 1 GiB to 5 GiB. If a job's memory request (e.g. --mem-per-cpu multiplied by the number of CPUs) works out to the total installed RAM, the job will not be able to run, because not all of the installed memory is free: some of it is being used by the system.
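As a worked check of this point, the sketch below compares a per-CPU memory request against a node's installed memory minus a reserve for the system. The node size used (48 cores, 192 GiB) is an assumed figure for illustration only, and fits_on_node is a made-up helper; see Picotte Hardware and Software for the real numbers.

```shell
#!/bin/bash
# fits_on_node MEM_PER_CPU_GIB NCPUS INSTALLED_GIB [RESERVED_GIB]
# Succeeds if the total request still leaves RESERVED_GIB for the system
# (default reserve: 5 GiB, the upper end of the range quoted above).
fits_on_node() {
    local mem_per_cpu=$1 ncpus=$2 installed=$3 reserved=${4:-5}
    local requested=$(( mem_per_cpu * ncpus ))
    (( requested <= installed - reserved ))
}

# Hypothetical node: 48 cores, 192 GiB installed (assumed figures)
if fits_on_node 4 48 192; then echo "4G x 48 fits"; else echo "4G x 48 does NOT fit"; fi
if fits_on_node 3 48 192; then echo "3G x 48 fits"; else echo "3G x 48 does NOT fit"; fi
```

With these assumed numbers, 4 GiB per core on 48 cores asks for exactly the installed 192 GiB and so cannot be satisfied, while 3 GiB per core (144 GiB) can.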

Resource Limits

There are some limits in place to ensure fair access for all users. Please see: Job Scheduling Algorithm#Current_Limits.

Modules

In general, you should NOT need the lines:

. /etc/profile.d/modules.sh

module load shared

module load gcc

module load slurm

Without modifying your login scripts, i.e. .bashrc or .bash_profile, the following modules should be loaded automatically:

1) shared 2) DefaultModules 3) gcc/9.2.0 4) slurm/picotte/20.02.6 5) default-environment

Environment Variables

Slurm sets some environment variables in every job; their names begin with "SLURM_". One important thing to note is that Slurm has two types of environment variables: INPUT and OUTPUT. INPUT variables are read and used during execution. They override options set in the job script but are overridden by options set in the command line. OUTPUT variables are set by the Slurm control daemon and cannot be overridden. Both types contain information about the runtime environment.

NOTE There are two meanings of the word "task" below.

- A "task" is a "sub-job" of an array job, i.e. an array job consists of multiple tasks. Related variables are named "SLURM_ARRAY_TASK_*"

- A "task" is one rank of an MPI program. Each task may also be multithreaded, which requires requesting --cpus-per-task > 1.

| Output Environment Variable | Meaning |
|---|---|
| SLURM_JOB_ID | Job ID. For an array job, each array task has its own job ID; use SLURM_ARRAY_JOB_ID and SLURM_ARRAY_TASK_ID to identify a task within the array. |
| SLURM_JOB_NAME | Job name |
| SLURM_SUBMIT_DIR | Directory job was submitted from |
| SLURM_JOB_NODELIST == SLURM_NODELIST | List of node names (strings) allocated to the job |
| SLURM_JOB_NUM_NODES == SLURM_NNODES | Number of nodes assigned to the job |
| SLURM_CPUS_PER_TASK | Number of CPU cores requested per task (e.g. MPI rank) of your job. Only set if the "--cpus-per-task" option is specified. |
| SLURM_NPROCS | Total number of tasks in your job (same as SLURM_NTASKS); this equals the number of CPU cores only if each task uses one core |
| SLURM_TASKS_PER_NODE | Number of tasks per node, e.g. number of MPI ranks per node |
| SLURM_ARRAY_JOB_ID | Job ID of an array job |
| SLURM_ARRAY_TASK_ID | Index number of the current array task |

See sbatch documentation[8] for full details.
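A quick way to become familiar with these variables is to print them at the top of a job script. The sketch below is one way to do that; describe_job is a made-up helper name, and each variable falls back to a placeholder so the snippet also runs outside of a Slurm job.

```shell
#!/bin/bash
# Print a short summary of the job environment. Outside of a Slurm job the
# variables are unset, so each one falls back to a placeholder value.
describe_job() {
    echo "job id:         ${SLURM_JOB_ID:-unset}"
    echo "job name:       ${SLURM_JOB_NAME:-unset}"
    echo "submitted from: ${SLURM_SUBMIT_DIR:-unset}"
    echo "nodes:          ${SLURM_JOB_NUM_NODES:-unset} (${SLURM_JOB_NODELIST:-unset})"
    echo "cpus per task:  ${SLURM_CPUS_PER_TASK:-1}"
}

describe_job
```

Having this summary at the top of the job's output file can make it easier to work out later what resources a given run actually received.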

Output

Say you have a job my_job.sh. By default, the output and error will be sent to the same file. That file will look something like this:

slurm-NNNNNN.out

where NNNNNN is the job ID number.

You can change the name of this file with the "-o" option:

[juser@picotte001 ~]$ sbatch -o output_file my_job.sh

Additionally, if you want to separate the output and error files then you can use the "-e" option to specify a location for the error output:

[juser@picotte001 ~]$ sbatch -e error_file my_job.sh
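The same options can also be set inside the job script using their long forms, --output and --error. sbatch replaces the pattern %j in the file name with the job ID, which keeps files from different jobs apart. A minimal sketch, with made-up file names and a made-up report function standing in for the real work:

```shell
#!/bin/bash
#SBATCH --output=my_job-%j.out
#SBATCH --error=my_job-%j.err

# Regular output ends up in the --output file,
# error messages end up in the --error file.
report() {
    echo "this line goes to standard output"
    echo "this line goes to standard error" >&2
}

report
```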

Storage

There are three classes of storage attached to all nodes. Certain classes of storage may be more suitable for certain types of workload.

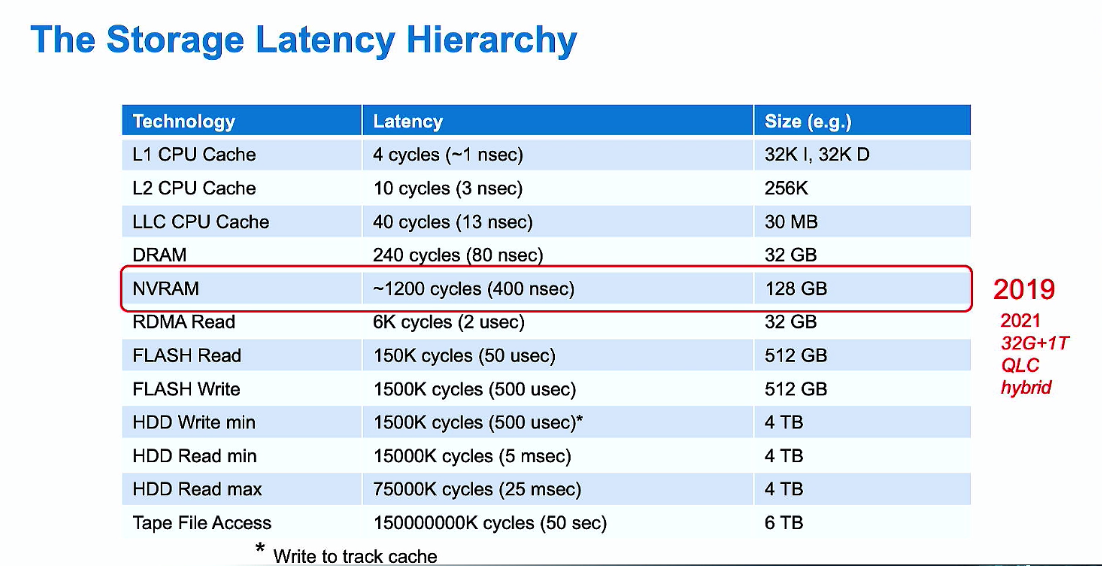

This list shows the latency of various types of memory and storage technology:

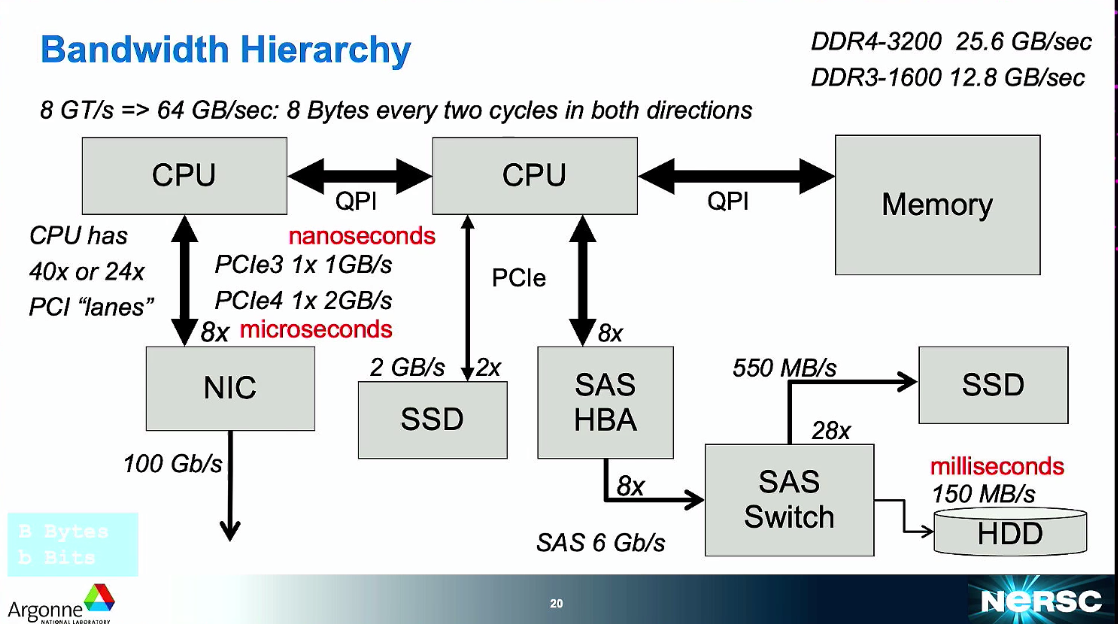

And this list shows the bandwidth hierarchy:

Persistent (NFS) Storage

Persistent storage is a network-attached storage for long-lived data. All group data directories (/ifs/groups/xxxGrp) and home directories (/home/juser) are on the persistent storage system. These directories appear on all nodes, i.e. on any node, you will see your home directory and group directory.

However, for certain i/o-intensive workloads, this is not a suitable storage system. I/O operations are performed over the network, which has limits on bandwidth and higher latency than locally-attached storage. Overload of this storage system (e.g. caused by many jobs reading and/or writing to the group or home directory) will likely affect interactive users, causing lags in response.

Picotte's persistent storage is provided by an Isilon scale-out clustered storage system, using the Network File System (NFS) file sharing protocol. It is a "cluster" as there are 12 nodes (servers) which are aggregated to present a single filesystem mount. It is attached via the 10 Gbps Ethernet internal cluster network.

Local Scratch Storage (TMP)

Local scratch storage is storage (SSD or HDD) installed in each node. It is most like the hard drive in your own PC. Because this is local storage, and so does not involve writing data over the network and waiting for a storage server to respond, it is fast. It is faster than using the home or group directories.

The amount of local scratch storage varies by node. Picotte nodes have between 874 GB and 1.72 TB. (See output of "sinfo" for the TMP_DISK space.)

Every job has a local scratch directory assigned to it. The path is given by the environment variable[9] TMP. A TMP directory is created for every job that runs:

TMP=/local/scratch/${SLURM_JOB_ID}

The environment variable TMPDIR is a synonym for TMP.

This directory is automatically deleted (including its contents) when the job ends.

Fast Parallel Shared Scratch Storage (BEEGFS_TMPDIR)

For jobs which have large (distributed) i/o demands, the BeeGFS shared scratch storage should be suitable. BeeGFS is a software-defined parallel (or clustered) storage system.[10][11] In this sense, it is similar to the Isilon persistent storage cluster. However, the BeeGFS system is connected to Picotte on its internal 100 Gbps HDR Infiniband network.

Every job will have a parallel scratch directory created, given by the environment variable:

BEEGFS_TMPDIR

which resolves to:

/beegfs/scratch/${SLURM_JOB_ID}

As with the local scratch directory, this BeeGFS scratch directory is automatically deleted at the end of the job.

/tmp Directory or Temporary Disk Space

Many programs will default to using /tmp for files created and used during execution. This is usually configurable by the user. Please use local scratch instead of /tmp: see the next section for the appropriate environment variables you can use. If the program you use follows convention and uses the environment variable $TMP, then it should work fine since that points to a job-specific local scratch directory.

Additionally, you may be able to configure the program to delete tmp files after completing.

Single Job Doing a Lot of I/O

Your home directory, and your research group directory (/ifs/groups/myresearchGrp), are NFS shared filesystems, mounted over the network.

If your job does a lot of file I/O (reads a lot, writes a lot, or both; where "a lot" means anything over a few hundred megabytes), and your job resides only on a single execution host, i.e. it is not a multi-node parallel computation, it may be faster to stage your work to the local scratch directory.

Each execution host has a local directory of about 850 GiB; the GPU and big memory nodes have about 1.7 TiB in RAID0 (striped). Since this is a local drive, connected to the PCI bus of the execution host, i/o speeds are much faster than i/o into your home directory or your group directory.

In the environment of the job, there are two environment variables which give the name of a job-specific local scratch directory:

$TMP

$TMPDIR

The directory name includes the job ID, and this directory is automatically deleted at the end of the job.

In your job script, you move all necessary files to $TMP, and then at the end of your job script, you move everything back to $SLURM_SUBMIT_DIR.

Please see the next section for a link to an example.

IMPORTANT This local scratch directory is automatically deleted and all its contents removed at the end of the job to which it is allocated.
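A minimal sketch of this staging pattern follows. The file names demo_input.dat and demo_output.dat, and the use of sort as a stand-in for the real program, are assumptions for illustration. Outside of Slurm the script falls back to the current directory and a temporary directory so that it can be tried on its own.

```shell
#!/bin/bash
#SBATCH --nodes=1
#SBATCH --ntasks=1

# Inside a job, Slurm provides SLURM_SUBMIT_DIR and the job-specific TMP.
# The fallbacks are only there so the sketch can be tried outside of Slurm.
SUBMIT_DIR="${SLURM_SUBMIT_DIR:-$PWD}"
SCRATCH="${TMP:-$(mktemp -d)}"

# Stand-in input file; in a real job the input would already exist.
printf '3\n1\n2\n' > "$SUBMIT_DIR/demo_input.dat"

# 1. Stage the input to local scratch and work from there
cp "$SUBMIT_DIR/demo_input.dat" "$SCRATCH/"
cd "$SCRATCH" || exit 1

# 2. Run the program (sort is a placeholder for the real computation)
sort -n demo_input.dat > demo_output.dat

# 3. Copy the results back before the job ends and scratch is deleted
cp demo_output.dat "$SUBMIT_DIR/"
cd "$SUBMIT_DIR" || exit 1
```

Copying results back must be the last step of the job script itself, since nothing in the scratch directory survives the end of the job.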

Advanced

Job Arrays

When you want to launch multiple similar jobs, with no dependencies between jobs, i.e. the jobs may or may not run concurrently, you can use "array jobs".[12]

You can think of a job array as a for-loop in Slurm. Each subjob ("array task") is an iteration, and all tasks have the same resource request (number of nodes, amount of memory, amount of time, etc.)

If you want to run many jobs, say, more than 50 or so up to some millions, you must use an array job. This is because using an array job drastically reduces the load on the scheduler. The scheduler can reuse information over all the tasks in the array job, rather than keeping a complete record of every single separate job submitted.

Each "subjob" is called an "array task". To start an array job use the option "--array"

#SBATCH --array=n[-m[:s]][%c]

where:

- n -- start ID

- m -- end ID

- s -- step size

- c -- maximum number of concurrent tasks

| Env. Variable | Meaning |
|---|---|
| SLURM_ARRAY_JOB_ID | First job ID of the array |
| SLURM_ARRAY_TASK_ID | Job array index value |
| SLURM_ARRAY_TASK_COUNT | Number of tasks in the job array |
| SLURM_ARRAY_TASK_MAX | Highest job array index value |
| SLURM_ARRAY_TASK_MIN | Lowest job array index value |
| SLURM_ARRAY_TASK_STEP | Step size of the array |

Array jobs should also enable "requeue". This enables individual tasks to restart if they end unexpectedly, e.g. if a node crashes. To enable restart, set "--requeue".

You can also throttle the number of simultaneous tasks to run by using the "%c" affix. For example:

### run no more than 25 tasks simultaneously

#SBATCH --array=1-500%25

And you can combine a step size with the throttle:

#SBATCH --array=1-999:2%20

IMPORTANT

- All tasks in the array are identical, i.e. every single command that is in the job script will be executed by every single task. The tasks in the array are differentiated by two things:

  - the environment variable SLURM_ARRAY_TASK_ID,

  - its own TMP environment variable (which is the full path to the task-specific local scratch directory).

- You can think of the line "#SBATCH --array=1-100:2" as the head of the for loop, i.e. the equivalent of "for i = 1; i <= 100; i = i+2". The body of the job script is then the body of the "for-loop"
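The for-loop analogy can be made concrete with a small function that prints the task IDs a given range would generate. This is only an illustration of how the start, end and step values expand; expand_array is a made-up helper and is not part of Slurm.

```shell
#!/bin/bash
# expand_array START END [STEP]
# Print the task IDs that "--array=START-END:STEP" would generate.
expand_array() {
    local start=$1 end=$2 step=${3:-1} i
    for (( i = start; i <= end; i += step )); do
        echo "$i"
    done
}

expand_array 1 100 2 | head -n 3    # first IDs: 1, 3, 5
expand_array 1 100 2 | grep -c .    # 50 tasks in total
```

Note that with a step of 2 starting from 1, the last ID is 99, not 100: the end value is an upper bound, as in the equivalent for loop.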

Here is a simple example of 100 tasks, which prints the SLURM_ARRAY_TASK_ID and value of TMP of each task:

#!/bin/bash

#SBATCH --partition=def

#SBATCH --account=fixmePrj

#SBATCH --time=0:05:00

#SBATCH --mem=256M

#SBATCH --requeue

#SBATCH --mail-user=fixme@drexel.edu

#SBATCH --array=10-1000:10

### name this file testarray.sh

echo $SLURM_ARRAY_TASK_ID

echo $TMP

The result is 100 files named slurm-NNNNN_MMM.out, where NNNNN is the Job ID, and MMM is the Task ID, from the range {10, 20, ..., 1000}. Each file contains a single integer, from {10, 20, ..., 1000}.

N.B. SLURM_ARRAY_TASK_ID is not "zero-padded", i.e. they are in the sequence 1, 2, 3, ..., 10, 11, ... instead of 01, 02, 03, ...
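If your input files are named with zero-padded numbers, the padding can be added in the job script with printf. A minimal sketch; pad_id and the input_NNNN.dat naming scheme are made up for illustration, and the fallback value 7 is only used when the snippet runs outside of an array job.

```shell
#!/bin/bash
# pad_id ID [WIDTH]
# Print ID left-padded with zeros to WIDTH digits (default 4).
pad_id() {
    printf "%0${2:-4}d\n" "$1"
}

task_id="${SLURM_ARRAY_TASK_ID:-7}"            # 7 is a stand-in outside of an array job
input_file="input_$(pad_id "$task_id").dat"    # hypothetical naming scheme
echo "$input_file"
```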

Job Dependencies

You may have a complex set of jobs, where some later jobs depend on the output of some earlier jobs. The --dependency option (short form -d) allows you to tell Slurm to start a job based on the status of another job.

sbatch --dependency=type:job_id[:job_id...] myJob.sh

The type can be one of:

- after - Can begin after the specified job starts running

- afterok - Can begin running after the specified jobs end successfully

- afternotok - Can begin running after the specified job ends with failure

- afterany - Can begin running after the specified job ends, regardless of status.
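When chaining jobs from a script, the dependency string has to be assembled from the job IDs of the earlier jobs. The sketch below builds that string; dependency_opt and the script names stage1.sh and stage2.sh are made up for illustration. The commented-out lines show how it might be combined with "sbatch --parsable", which prints the job ID instead of the usual "Submitted batch job" message (on multi-cluster setups the output may also include a cluster name).

```shell
#!/bin/bash
# dependency_opt TYPE JOBID [JOBID...]
# Build the --dependency option, joining the job IDs with colons.
dependency_opt() {
    local type=$1
    shift
    local IFS=:
    echo "--dependency=${type}:$*"
}

dependency_opt afterok 1001 1002    # prints --dependency=afterok:1001:1002

# On the cluster, two jobs could be chained like this (not run here):
#   first=$(sbatch --parsable stage1.sh)
#   sbatch "$(dependency_opt afterok "$first")" stage2.sh
```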

For more complicated dependency scenarios, have a look at SnakeMake.

Job Arrays or Multiple Jobs Doing I/O to Same Directory

For best performance for multi-node parallel jobs, it is better to run the job from a temporary directory in /scratch. Write a dependent job to run after the completion of the i/o-intensive job(s) to copy the outputs from /scratch back to the groups directory.

The /scratch directory is shared to all nodes, and is hosted on the fast BeeGFS filesystem. Just like local scratch, shared scratch directories are automatically deleted at the end of the job.

For manually-created BeeGFS scratch directories, files which have not been accessed in 45 days are deleted. If the filesystem is filled up, data will be deleted to make space.

There may be more than one way to do this.

No-setup Way

Automatically Created

Just like the local scratch directory $TMP or $TMPDIR above, a BeeGFS scratch directory is created automatically for every job. Its path is given by the environment variable

BEEGFS_TMPDIR

Like local scratch, this directory and its contents are automatically deleted at the end of the job.

Manually Created

- Create a directory for yourself in /beegfs/scratch:

[juser@picotte001]$ mkdir /beegfs/scratch/myname

[juser@picotte001]$ cd /beegfs/scratch/myname

- Manually cp your files to that directory.

Setup

- All your job files (program, input files) are in ~/MyJob. This can be considered a sort of "template" job directory.

- Your job script will have to copy the appropriate files to /scratch/$USER/$SLURM_JOB_ID, and then copy the outputs back once the job is complete.

Script Snippet

NB This snippet may not work for your particular case. It is meant to show one way of staging job files to /scratch. The details will vary depending on your own particular workflow.

#!/bin/bash

#SBATCH --account=myresearchPrj

#SBATCH --nodes=4

...

module load picotte-openmpi/gcc

export PROGRAM=~/bin/my_program

# copy data files (NOT executables) to staging directory, and run from there

cp -R ./* $BEEGFS_TMPDIR

cd $BEEGFS_TMPDIR

# This script uses OpenMPI. "--mca ..." is for the OpenMPI implementation

# of MPI only.

# "--mca btl ..." specifies preference order for network fabric

# not strictly necessary as OpenMPI selects the fastest appropriate one first

echo "Starting $PROGRAM in $( pwd ) ..."

$MPI_RUN --mca btl openib,self,sm $PROGRAM

# create job-specific subdirectory and copy all output to it

mkdir -p $SLURM_SUBMIT_DIR/$SLURM_JOB_ID

cp outfile.txt $SLURM_SUBMIT_DIR/$SLURM_JOB_ID

# the Slurm output file (default name slurm-JOBID.out) is in $SLURM_SUBMIT_DIR

cd $SLURM_SUBMIT_DIR

mv slurm-${SLURM_JOB_ID}.out $SLURM_SUBMIT_DIR/$SLURM_JOB_ID

# staging directory BEEGFS_TMPDIR is automatically deleted

All the known output files will be in a subdirectory named $SLURM_JOB_ID (some integer) in the directory where the sbatch command was issued. So, if your job was given job ID 637, all the output would be in ~/MyJob/637/.

sbatch Options

Various sbatch options can control job parameters, such as which file output is redirected to, wall clock limit, shell, etc. Please see Slurm Quick Start Guide for some common parameters.

mpirun Options

Currently, only OpenMPI is available.

OpenMPI - picotte-openmpi

mpirun myprog

Modifying Job Arrays

The scontrol command can be used to modify job arrays while tasks are running. For example, if there is a large array of jobs with a mix of running and pending tasks, one can modify the "throttle", i.e. the limit on the number of simultaneously running tasks:

scontrol update JobId=1234567 ArrayTaskThrottle=50

Modules

Environment Modules set up the environment for the job to run in. At the very least, you should have these modules loaded:

shared

gcc

If you used GCC with OpenMPI, you would also need:

picotte-openmpi/gcc/4.1.0

So, your job script would need to have:

module load picotte-openmpi/gcc/4.1.0

Module-loading is order-specific. Modules which are depended on by others must come earlier.

Examples

References

[1]

[2]

[3] Wikipedia article on Newline

[4] To see why we default to using bash, please read Csh Programming Considered Harmful

[5] Slurm Documentation -- sbatch (Be sure to view the page corresponding to the Slurm version in use on Picotte.)

[6]

[7]

[8]

[9] What are environment variables in Bash? (opensource.com)

[10] BeeGFS website