Slurm

This article describes some of the more commonly used command-line tools for Slurm. See also the Slurm User Guide.[1]

Overview

Current version on Picotte: 20.02.6

Components of Slurm

Slurm has several components which interact (see diagram):

- slurmctld: manages resources and jobs

- slurmd: one slurmd daemon process runs on each compute node. It accepts a job assignment from slurmctld, and manages that job during its lifetime

- "s" commands: commands used by the end-user to submit and manage jobs.

- slurmdbd: records accounting information for multiple Slurm-managed clusters in a single database

- slurmrestd: optional daemon which is used to interact with Slurm through its REST API

Things Slurm Can Do

- Run multiple jobs with a single script.

- Chain jobs such that one job's start is dependent on another job's completion.
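Chaining can be sketched as follows, assuming two hypothetical job scripts, preprocess.sh and analyze.sh. The sbatch option "--parsable" prints just the job ID (optionally followed by ";" and the cluster name), and "--dependency=afterok:job_id" holds the second job until the first one completes successfully. The helper name dependency_flag is an illustration only and is not part of Slurm.

```shell
#!/bin/bash
# Sketch: submit analyze.sh so that it starts only after preprocess.sh succeeds.
# Both script names are hypothetical placeholders.

# Build the --dependency option from the output of "sbatch --parsable",
# which is either "jobid" or "jobid;cluster".
dependency_flag() {
    local parsable_output="$1"
    local job_id="${parsable_output%%;*}"   # drop an optional ";cluster" suffix
    printf '%s\n' "--dependency=afterok:${job_id}"
}

if command -v sbatch >/dev/null 2>&1 && [ -f preprocess.sh ] && [ -f analyze.sh ]; then
    first_job=$(sbatch --parsable preprocess.sh)
    sbatch "$(dependency_flag "$first_job")" analyze.sh
else
    echo "sbatch or the job scripts were not found; nothing submitted."
fi
```

Other dependency types exist (for example "afterany"); consult the sbatch man page for their exact definitions.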

Things NOT TO DO

- DO NOT write a shell loop in a job script. Use a job array, instead. Examples:

- Similarly, DO NOT write loops in any other scripting language (Python, Perl, R, etc.) to run separate jobs.
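As a minimal sketch of the job array approach recommended above: the script below is submitted once with sbatch, and Slurm runs it as ten separate tasks, each with its own value of SLURM_ARRAY_TASK_ID. The input file naming scheme (input_N.dat) is an assumption made for illustration.

```shell
#!/bin/bash
#SBATCH --array=1-10
#SBATCH --time=00:10:00
#SBATCH --output=array_%A_%a.out
# In the output file name, %A is the array's job ID and %a is the task index.

# Slurm sets SLURM_ARRAY_TASK_ID for each task; default to 1 so the
# script can also be tried outside of Slurm.
task_id="${SLURM_ARRAY_TASK_ID:-1}"

# Hypothetical naming scheme: one input file per array task.
input_file="input_${task_id}.dat"

echo "Array task ${task_id} would process ${input_file}"
```

Each task is scheduled independently, so the scheduler, not a loop, decides when and where the individual pieces run.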

Common Use Cases

- Running Slurm Batch Jobs

- Interactive Terminal Session on Compute Node

- Running GUI Applications on Compute Nodes

Commands

Documentation for all of these commands is available as man pages: type "man command". It is also available on the web at https://slurm.schedmd.com/command.html (replacing "command" with the name of the command), e.g. the documentation for sbatch is at https://slurm.schedmd.com/sbatch.html

The summary of options on this page is not definitive. Slurm commands have many options, some with interdependencies. Please consult the official documentation for exact definitions.

Local Utility Scripts and Aliases

Slurm commands are comprehensive and provide a wealth of output. To help with usability, we have defined some generally useful aliases which provide more than the default brief output, but less than the comprehensive full output.

To use these local aliases, load the modulefile:

slurm_util

For details, see: Slurm Utility Commands

sbatch

sbatch[2] is used to submit job scripts to Slurm for later execution. The script may contain multiple srun commands in order to launch parallel tasks.

Options have a short (single-letter) and a long form. The short form does not need the "=" sign, while the long form does. E.g. "-N 4" is equivalent to "--nodes=4"

Some common options are listed below. This summary is incomplete and not definitive. Consult the official documentation, either the man page for sbatch, or the web version:

| Option | Meaning | Example |
|---|---|---|
| -A, --account=account | Identifies which account the job should be charged to. | -A somethingPrj |
| -D, --chdir=dir | Set the work directory to the specified directory before executing the job script. If unspecified, the directory where the sbatch command is issued is used. | -D /ifs/groups/somethGrp/myname/ |
| -p, --partition=part | Specifies what partition the job will run on. If unspecified, the "def" partition will be used. | -p def |
| -N, --nodes=numNodes | Specify the number of nodes to be allocated for the job. | -N 16 |
| -n, --ntasks=count | sbatch does not launch tasks, it requests an allocation of resources and submits a batch script. This option tells Slurm that job steps within the allocation will launch a maximum of count tasks, and to provide sufficient resources. The default is one task per node. | --ntasks=4 |
| -c, --cpus-per-task=count | Advise Slurm that the job will require count number of CPU cores per task. (The default value is 1.) | --cpus-per-task=12 |
| -t, --time=hh:mm:ss | Specify the amount of time to allocate for the job. | -t 24:00:00 |
| --mem=size[units] | Specify the real memory required per node. Default units are megabytes. Different units can be specified using one of the suffixes "K", "M", "G", or "T". NOTE: The "--mem", "--mem-per-cpu", and "--mem-per-gpu" options are mutually exclusive. | --mem=8GB |
| --mem-per-cpu=size[units] | Minimum memory required per allocated CPU. Default units are megabytes. The default value is 3900 MB. | |
| --mem-per-gpu=size[units] | Minimum (node) memory required per allocated GPU. Default units are megabytes. | |
| --mail-type=type | Notify the user by mail when an event type occurs. Acceptable types are: NONE, BEGIN, END, FAIL, REQUEUE, STAGE_OUT, ALL, and ARRAY_TASKS. | --mail-type=BEGIN,END,FAIL |
| --mail-user=user@host | Set email address to receive job status. N.B. Drexel's Outlook mail servers will drop Picotte emails, so use an external email address. | --mail-user=juser@gmail.com |
| -i, --input=input_file | input_file will be used as input for the job. | -i /ifs/groups/myGrp/data/awesomedata.csv |
| -o, --output=output_file | Job output will be written to output_file. | -o /ifs/groups/myGrp/juser/job_outputs/awesomejob.out |
| -e, --error=error_file | Job error will be written to error_file. | -e /ifs/groups/myGrp/juser/job_outputs/sadjob.err |
| --gres=gpu:N | Request N GPU devices (cards). N can be up to 4. | --gres=gpu:2 |

Options may be passed on the command line:

[juser@picotte001 ~]$ sbatch -N 4 -t 12:00:00 --mem=2GB myjob.sh

or set in the job script:

#SBATCH -N 4

#SBATCH -t 12:00:00

#SBATCH --mem=2GB

Options passed on the command line override the settings embedded in the job script.

Type "man sbatch" at the command line for full details.
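Putting several of the options above together, a complete job script might look like the following sketch. The account name is the placeholder used in the table above, and the resource values are arbitrary examples, not recommendations. The variables SLURM_JOB_ID and SLURM_CPUS_PER_TASK are set by Slurm inside a job; the defaults shown only allow the script to be tried by hand outside of Slurm.

```shell
#!/bin/bash
#SBATCH --account=somethingPrj
#SBATCH --partition=def
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=4
#SBATCH --time=01:00:00
#SBATCH --mem=8G
#SBATCH --output=myjob-%j.out
# In the output file name, %j expands to the job ID.

# Fall back to harmless defaults when not running under Slurm.
job_id="${SLURM_JOB_ID:-interactive}"
threads="${SLURM_CPUS_PER_TASK:-1}"

echo "Job ${job_id} running on $(hostname) with ${threads} CPU core(s)"
```

All "#SBATCH" lines must come before the first executable command in the script; sbatch stops looking for options once it reaches one.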

salloc

salloc[3] is used to allocate resources for a job in real time. It spawns a new shell with the appropriate Slurm environment variables set. Once the resources have been allocated, you may ssh directly to the allocated nodes.

srun

srun[4] is used to submit a job for execution or to initiate job steps in real time. For example, to get a shell on a compute node:

[juser@picotte001 ~]$ srun -N 1 --mem=32G --pty /bin/bash

[juser@node001 ~]$

(Or you can use "/bin/bash -l" which gives a new login shell; useful

if you have separated settings into .bash_profile for environment

variables, and other things into .bashrc.)

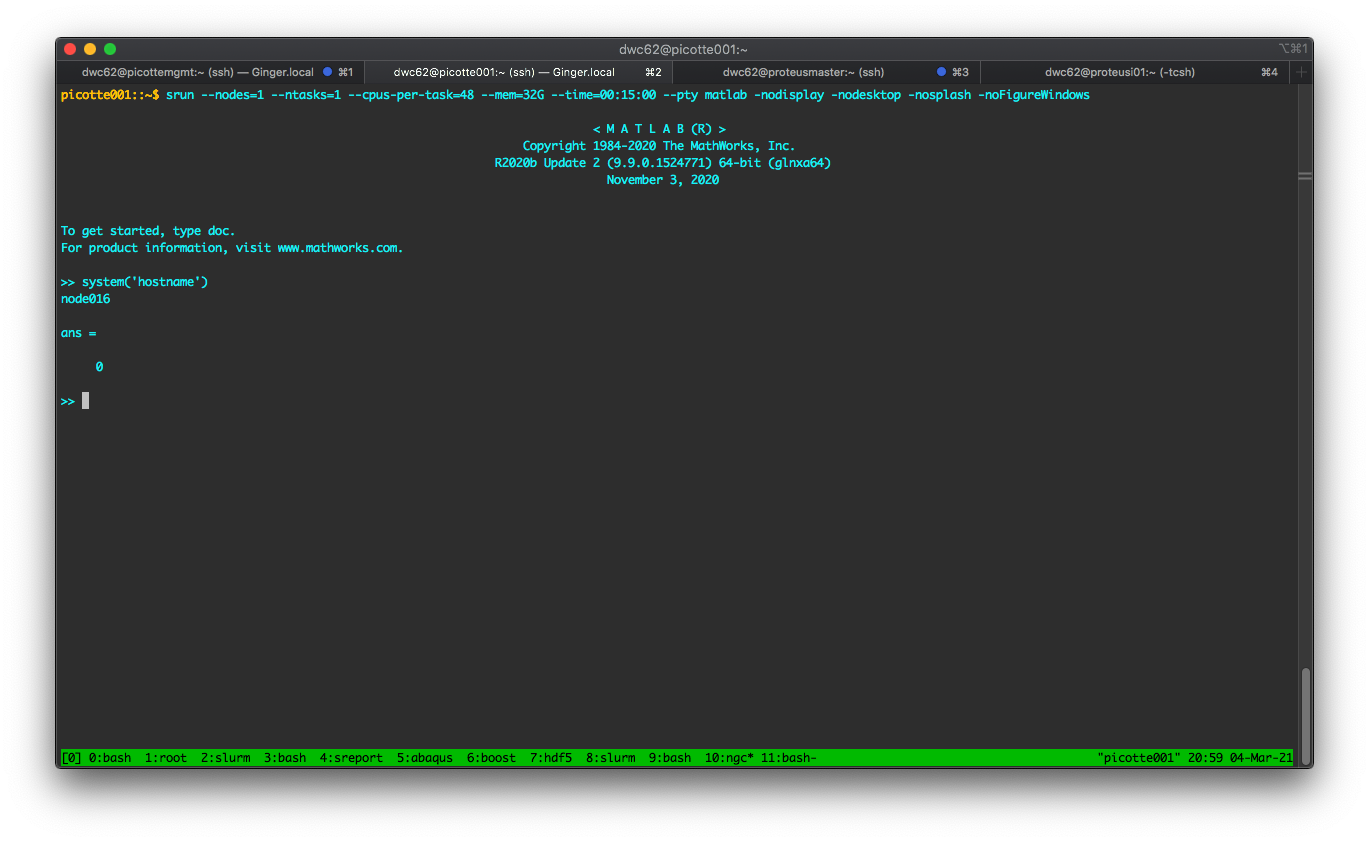

Example: run Matlab (terminal UI) using 48 cores on a node:

[juser@picotte001 ~]$ module load matlab

[juser@picotte001 ~]$ srun --nodes=1 --ntasks=1 --cpus-per-task=48 --mem=32G --time=00:15:00 --pty matlab -nodisplay -nodesktop -nosplash -noFigureWindows

Unfortunately, there are unresolved issues with running MPI programs

with srun, which means one cannot do:

[juser@picotte001 ~]$ srun ... some_mpi_program

To run MPI programs, a batch script must be used, executing the program

with "mpirun".

squeue

squeue[5] is used to report the state of jobs or job steps. The option "-j" shows the status of a given job.

[juser@picotte001 ~]$ squeue -j 127

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

127 all test.sh juser R 1:49:21 1 r569

The option "--me" will show all your own jobs.

The option "-u" will show all jobs for a given user.

[juser@picotte001 ~]$ squeue -u juser

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

127 all test.sh juser R 1:49:21 1 r569

128 all test.sh juser R 1:50:08 1 r569

sjstat

sjstat displays statistics of jobs. Documentation is via "-h" for

a brief help message, and the man page.

Basic usage:

[juser@picotte001 ~]$ sjstat

Scheduling pool data:

-------------------------------------------------------------

Pool Memory Cpus Total Usable Free Other Traits

-------------------------------------------------------------

bm 1546000Mb 48 2 2 2

def* 192000Mb 48 74 74 69

gpu 192000Mb 48 12 12 12

long 192000Mb 48 74 74 69

gpulong 192000Mb 48 12 12 12

Running job data:

----------------------------------------------------------------------

JobID User Nodes Pool Status Used Master/Other

----------------------------------------------------------------------

841713 mb3544 1 def R 13:05:12 node001

841766 wc492 1 def R 1:16 node001

841756 wc492 1 def R 1:22:22 node001

841758 rs3597 1 def R 58:51 node001

841551 aag99 1 def R 19:41:48 node002

841550 aag99 1 def R 19:42:05 node041

841513 aag99 1 def R 1-00:44:17 node005

841514 aag99 1 def R 1-00:44:17 node040

scancel

scancel[6] is used to cancel a pending or running job or job step.

[juser@picotte001 ~]$ sbatch testJob.sh

Submitted batch job 127

[juser@picotte001 ~]$ squeue -u juser

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

127 RM testJob. cwf25 PD 0:00 1 (None)

[juser@picotte001 ~]$ scancel 127

[juser@picotte001 ~]$ squeue -u juser

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

[juser@picotte001 ~]$

sstat

sstat[7] shows status information on running jobs.

[juser@picotte001 ~]$ sstat --jobs 123456

... [very long output omitted] ...

sacct

sacct[8] is used to report accounting information on active or completed jobs.

Active job:

[juser@picotte001 ~]$ sbatch testJob.sh

Submitted batch job 127

[juser@picotte001 ~]$ sacct -j 127

JobID JobName Partition Account AllocCPUS State ExitCode

------------ ---------- ---------- ---------- ---------- ---------- --------

127 testJob.sh RM myGrp 28 RUNNING 0:0

Completed job:

[juser@picotte001 python]$ sacct -j 127

JobID JobName Partition Account AllocCPUS State ExitCode

------------ ---------- ---------- ---------- ---------- ---------- --------

127 testJob.sh RM myGrp 28 COMPLETED 0:0
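The default columns can be replaced with the "--format" option. The following is a sketch that uses the example job ID from the listings above and a selection of standard sacct field names; the particular selection is only a suggestion.

```shell
#!/bin/bash
# Sketch: show selected accounting fields for one job.
job_id=127   # example job ID from the listings above
fields="JobID,JobName,Partition,State,Elapsed,MaxRSS,ExitCode"

if command -v sacct >/dev/null 2>&1; then
    sacct -j "$job_id" --format="$fields"
else
    echo "sacct not found; would run: sacct -j $job_id --format=$fields"
fi
```

Running "sacct --helpformat" lists all field names that are available.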

seff

seff is undocumented. It can report "efficiency" statistics, i.e. how much resource was used as a percentage of that resource requested. This works on completed jobs. If reported efficiency is low, the resource requests (amount of memory, number of CPUs) should be reduced in future runs.

Example - a job which requested 16 cores (slots) and 128 GB of memory:

[juser@picotte001 ~]$ seff 12345

Job ID: 12345

Cluster: picotte

User/Group: juser/juser

State: OUT_OF_MEMORY (exit code 0)

Nodes: 1

Cores per node: 16

CPU Utilized: 06:50:30

CPU Efficiency: 11.96% of 2-09:10:56 core-walltime

Job Wall-clock time: 03:34:26

Memory Utilized: 1.54 GB

Memory Efficiency: 1.21% of 128.00 GB
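The CPU efficiency figure in this output can be checked by hand: it is the CPU time used divided by the core-walltime, i.e. the number of cores multiplied by the wall-clock time. The sketch below redoes that arithmetic with the numbers from the example; the helper name to_seconds is an illustration and not part of Slurm.

```shell
#!/bin/bash
# Sketch: reproduce the "CPU Efficiency" line of the seff output above.

# Convert a Slurm duration, [D-]HH:MM:SS, to seconds.
to_seconds() {
    local t="$1"
    local days=0
    case "$t" in
        *-*) days="${t%%-*}"; t="${t#*-}" ;;
    esac
    local h="${t%%:*}"
    local rest="${t#*:}"
    local m="${rest%%:*}"
    local s="${rest#*:}"
    # Strip one leading zero so values such as "09" are not read as octal.
    h="${h#0}"; m="${m#0}"; s="${s#0}"
    echo $(( days * 86400 + ${h:-0} * 3600 + ${m:-0} * 60 + ${s:-0} ))
}

cores=16
cpu_used=$(to_seconds 06:50:30)     # "CPU Utilized"
wall=$(to_seconds 03:34:26)         # "Job Wall-clock time"
core_wall=$(( cores * wall ))       # core-walltime in seconds

# Efficiency in hundredths of a percent, using integer arithmetic only.
eff_x100=$(( cpu_used * 10000 / core_wall ))

printf 'core-walltime: %d s\n' "$core_wall"
printf 'CPU efficiency: %d.%02d%%\n' $(( eff_x100 / 100 )) $(( eff_x100 % 100 ))
```

Here the core-walltime is 205856 seconds, which is the 2-09:10:56 reported by seff, and the efficiency comes out at 11.96%: the job kept its 16 cores busy for only about one eighth of the time they were allocated.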

sreport

sreport[9] is used to generate reports of job usage and cluster utilization for Slurm jobs saved to the Slurm Database, slurmdbd.

scontrol

scontrol[10] is used to view or modify Slurm configuration, and jobs (among other things). scontrol has a wide variety of uses, some of which are demonstrated below.

scontrol can be used to get information on the nodes Slurm manages. For an individual node use the command "scontrol show node node". Using "scontrol show nodes" will show all nodes in the cluster with each node's information being displayed in the below format.

[juser@picotte001 ~]$ scontrol show node node013

NodeName=node013 Arch=x86_64 CoresPerSocket=12

CPUAlloc=48 CPUTot=48 CPULoad=7.76

AvailableFeatures=(null)

ActiveFeatures=(null)

Gres=(null)

NodeAddr=node013 NodeHostName=node013 Version=20.02.6

OS=Linux 4.18.0-147.el8.x86_64 #1 SMP Thu Sep 26 15:52:44 UTC 2019

RealMemory=192000 AllocMem=0 FreeMem=178621 Sockets=4 Boards=1

State=ALLOCATED ThreadsPerCore=1 TmpDisk=174864 Weight=1 Owner=N/A MCS_label=N/A

Partitions=def,long

BootTime=2021-01-27T14:42:33 SlurmdStartTime=2021-01-27T14:44:20

CfgTRES=cpu=48,mem=187.50G,billing=48

AllocTRES=cpu=48

CapWatts=n/a

CurrentWatts=0 AveWatts=0

ExtSensorsJoules=n/s ExtSensorsWatts=0 ExtSensorsTemp=n/s

In order to get information about a job use the command "scontrol show job job_id". Here is an example of a job running on a GPU node:

[juser@picotte001 ~]$ scontrol show job 127

JobId=127 JobName=somejob

UserId=juser(1002) GroupId=dwc62(1002) MCS_label=N/A

Priority=2012 Nice=0 Account=someprj QOS=normal WCKey=*

JobState=RUNNING Reason=None Dependency=(null)

Requeue=1 Restarts=0 BatchFlag=1 Reboot=0 ExitCode=0:0

RunTime=00:00:46 TimeLimit=1-00:00:00 TimeMin=N/A

SubmitTime=2021-04-29T11:48:35 EligibleTime=2021-04-29T11:48:35

AccrueTime=2021-04-29T11:48:35

StartTime=2021-04-29T11:48:35 EndTime=2021-04-30T11:48:35 Deadline=N/A

SuspendTime=None SecsPreSuspend=0 LastSchedEval=2021-04-29T11:48:35

Partition=gpu AllocNode:Sid=picotte001:27308

ReqNodeList=(null) ExcNodeList=(null)

NodeList=gpu001

BatchHost=gpu001

NumNodes=1 NumCPUs=1 NumTasks=1 CPUs/Task=1 ReqB:S:C:T=0:0:*:*

TRES=cpu=1,node=1,billing=43,gres/gpu=1

Socks/Node=* NtasksPerN:B:S:C=0:0:*:* CoreSpec=*

MinCPUsNode=1 MinMemoryNode=0 MinTmpDiskNode=0

Features=(null) DelayBoot=00:00:00

OverSubscribe=OK Contiguous=0 Licenses=(null) Network=(null)

Command=/home/juser/somejob.sh

WorkDir=/home/juser

StdErr=/home/juser/slurm-127.err

StdIn=/dev/null

StdOut=/home/juser/slurm-127.out

Power=

MemPerTres=gpu:40960

TresPerNode=gpu:1

MailUser=juser MailType=NONE

scontrol can also be used to hold and release jobs.

[juser@picotte001 ~]$ sbatch testJob.sh

Submitted batch job 128

[juser@picotte001 ~]$ scontrol hold 128

[juser@picotte001 ~]$ squeue -u juser

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

128 def testJob. juser PD 0:00 1 (JobHeldUser)

[juser@picotte001 ~]$ scontrol release 128

[juser@picotte001 ~]$ squeue -u juser

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

128 def testJob. juser R 0:06 1 node023

scontrol can also modify aspects of a job, like run time, and the task throttle of an array job. To modify the throttle on an array job:

scontrol update JobId=job_id ArrayTaskThrottle=count

e.g.

scontrol update JobID=12345678 ArrayTaskThrottle=50

This modifies the throttle but leaves tasks which are already running alone; it only changes the limit on how many tasks can run simultaneously.

One other thing that scontrol can do is display information about the partition. For a specific partition use "scontrol show partition part", where part is the name of the partition you want information on. Not specifying a partition will return information on all partitions managed by Slurm.

[juser@picotte001 ~]$ scontrol show partition def

PartitionName=def

AllowGroups=ALL AllowAccounts=ALL AllowQos=ALL

AllocNodes=ALL Default=YES QoS=N/A

DefaultTime=00:30:00 DisableRootJobs=NO ExclusiveUser=NO GraceTime=0 Hidden=NO

MaxNodes=50 MaxTime=2-00:00:00 MinNodes=1 LLN=NO MaxCPUsPerNode=UNLIMITED

Nodes=node[001-074]

PriorityJobFactor=1 PriorityTier=1 RootOnly=NO ReqResv=NO OverSubscribe=NO

OverTimeLimit=NONE PreemptMode=OFF

State=UP TotalCPUs=3552 TotalNodes=74 SelectTypeParameters=NONE

JobDefaults=(null)

DefMemPerCPU=3900 MaxMemPerNode=UNLIMITED

TRESBillingWeights=CPU=1.0,GRES/gpu=0,Mem=0

sinfo

sinfo[11] is used to report on the state of the partitions and nodes managed by Slurm.

[juser@picotte001 ~]$ sinfo

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

bm up 21-00:00:0 2 idle bigmem[001-002]

def* up 2-00:00:00 1 mix node002

def* up 2-00:00:00 12 alloc node[001,014-024]

def* up 2-00:00:00 61 idle node[003-013,025-074]

gpu up 1-00:00:00 9 mix gpu[001-005,009-012]

gpu up 1-00:00:00 3 idle gpu[006-008]

If you load the slurm_util[12] module, you will have the

sinfo_detail alias, which will produce output like:

[juser@picotte001 ~]$ sinfo_detail

NODELIST NODES PART STATE CPUS S:C:T MEMORY TMP_DISK WEIGHT AVAIL_FE REASON

bigmem001 1 bm mixed 48 4:12:1 1546000 174864 1 (null) none

bigmem002 1 bm idle 48 4:12:1 1546000 174864 1 (null) none

gpu001 1 gpu idle 48 4:12:1 192000 174864 1 (null) none

gpu002 1 gpu idle 48 4:12:1 192000 174864 1 (null) none

gpu003 1 gpu idle 48 4:12:1 192000 174864 1 (null) none

gpu004 1 gpu idle 48 4:12:1 192000 174864 1 (null) none

gpu005 1 gpu idle 48 4:12:1 192000 174864 1 (null) none

gpu006 1 gpu idle 48 4:12:1 192000 174864 1 (null) none

gpu007 1 gpu idle 48 4:12:1 192000 174864 1 (null) none

gpu008 1 gpu idle 48 4:12:1 192000 174864 1 (null) none

gpu009 1 gpu idle 48 4:12:1 192000 174864 1 (null) none

gpu010 1 gpu idle 48 4:12:1 192000 174864 1 (null) none

gpu011 1 gpu idle 48 4:12:1 192000 174864 1 (null) none

gpu012 1 gpu idle 48 4:12:1 192000 174864 1 (null) none

node001 1 def* allocated 48 4:12:1 192000 174864 1 (null) none

node002 1 def* allocated 48 4:12:1 192000 174864 1 (null) none

node003 1 def* allocated 48 4:12:1 192000 174864 1 (null) none

node004 1 def* allocated 48 4:12:1 192000 174864 1 (null) none

node005 1 def* allocated 48 4:12:1 192000 174864 1 (null) none

node006 1 def* allocated 48 4:12:1 192000 174864 1 (null) none

node007 1 def* allocated 48 4:12:1 192000 174864 1 (null) none

node008 1 def* idle 48 4:12:1 192000 174864 1 (null) none

node009 1 def* idle 48 4:12:1 192000 174864 1 (null) none

node010 1 def* idle 48 4:12:1 192000 174864 1 (null) none

...

node072 1 def* idle 48 4:12:1 192000 174864 1 (null) none

node073 1 def* idle 48 4:12:1 192000 174864 1 (null) none

node074 1 def* idle 48 4:12:1 192000 174864 1 (null) none

Recommendations

Single-node, single-threaded jobs

Do not specify any sbatch options related to nodes, tasks, or CPUs. The default behavior for a job is that it is single-node and single-threaded.

Single-node, multi-threaded jobs

Rather than specify some combination of values for "ntasks/ntasks-per-node" and "cpus-per-task", just use "cpus-per-task" ("ntasks/ntasks-per-node" will take on their default value of 1).

For example, to run a multithreaded process on a single node using 48 threads (1 thread per core):

#SBATCH --nodes=1

#SBATCH --ntasks=1

#SBATCH --cpus-per-task=48

Multi-node (MPI) jobs

For MPI jobs that will run on multiple nodes, it may be useful to map "ntasks-per-node" to the number of MPI ranks per node, and "cpus-per-task" to the number of threads per rank.

E.g. a 4 node job which will run 12 ranks per node, and 4 threads per rank:

#SBATCH --nodes=4

#SBATCH --ntasks-per-node=12

#SBATCH --cpus-per-task=4
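A fuller job script for this layout might look like the sketch below. It assumes the program uses OpenMP for its threads (hence OMP_NUM_THREADS), and some_mpi_program is a placeholder name. The defaults after ":-" only allow the arithmetic to be checked outside of Slurm; inside a job, Slurm sets these variables from the "#SBATCH" options.

```shell
#!/bin/bash
#SBATCH --nodes=4
#SBATCH --ntasks-per-node=12
#SBATCH --cpus-per-task=4

# Values set by Slurm inside the job, with the requested values as fallbacks.
nodes="${SLURM_JOB_NUM_NODES:-4}"
ranks_per_node="${SLURM_NTASKS_PER_NODE:-12}"
threads_per_rank="${SLURM_CPUS_PER_TASK:-4}"

total_ranks=$(( nodes * ranks_per_node ))
total_cores=$(( total_ranks * threads_per_rank ))

# Assumption: the program is threaded with OpenMP.
export OMP_NUM_THREADS="$threads_per_rank"

echo "${total_ranks} MPI ranks x ${threads_per_rank} threads = ${total_cores} cores"

# some_mpi_program is a placeholder for the real executable.
if command -v mpirun >/dev/null 2>&1 && command -v some_mpi_program >/dev/null 2>&1; then
    mpirun -np "$total_ranks" some_mpi_program
else
    echo "mpirun or some_mpi_program not found; nothing launched."
fi
```

With these values, 12 ranks of 4 threads each use all 48 cores on every node, for 48 ranks and 192 cores in total.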

GPU jobs

GPU-enabled programs always perform some part of their computation on CPUs. Since there are 4 GPU devices and 48 cores in each GPU node (12 cores per GPU), a sensible default is:

#SBATCH --partition=gpu

#SBATCH --nodes=4

#SBATCH --gres=gpu:4

#SBATCH --cpus-per-gpu=12

N.B. "cpus-per-gpu" and "cpus-per-task" are mutually exclusive: if

one is set, the other must not be set.

Partitions

In Slurm, a partition is a grouping of nodes. In Picotte, partitions are defined for node types (standard compute, big memory, GPU), and also for run time (the "long" partitions are for jobs which run for longer than 48 hours).

| Partition | Type of nodes | Notes |

|---|---|---|

| def | Standard compute (48-core, 187 GB RAM) | This is the default partition. Jobs which do not specify a partition are placed in "def". Up to 48 hours wallclock. |

| bm | Big memory (48-core, 1.5 TB RAM) | Up to 504 hours wallclock. Must request at least 200 GiB memory per node. |

| gpu | GPU compute (48-core, 187 GB RAM, 4x Nvidia Tesla V100) | GPU devices have to be requested with "--gres=gpu:N". Up to 36 hours wallclock. |

| long | Standard compute | Long job version of "def". Up to 192 hours of wallclock. |

| gpulong | GPU compute | Long job version of "gpu". Up to 192 hours of wallclock. |

Debugging Problems with Jobs

If your job is not running, try resubmitting it with the option "--test-only". This validates the job script and provides an estimate of when the job would run, without actually submitting the job.
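A minimal sketch of such a check, where myjob.sh is a placeholder for your own job script:

```shell
#!/bin/bash
# Sketch: validate a job script and get a start-time estimate without submitting it.
job_script="myjob.sh"   # placeholder name

if command -v sbatch >/dev/null 2>&1 && [ -f "$job_script" ]; then
    sbatch --test-only "$job_script"
else
    echo "sbatch or ${job_script} not found; would run: sbatch --test-only ${job_script}"
fi
```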

See Also

References

[2] Slurm Documentation - sbatch

[3] Slurm Documentation - salloc

[4] Slurm Documentation - srun

[5] Slurm Documentation - squeue

[6] Slurm Documentation - scancel

[7] Slurm Documentation - sstat

[8] Slurm Documentation - sacct

[9] Slurm Documentation - sreport

[10] Slurm Documentation - scontrol