Proteus Hardware and Software

Hardware

The qhost command gives a snapshot of all hosts/nodes.
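As a rough sketch, the snapshot from qhost can be read into Python for further filtering. The helper names `parse_qhost` and `run_qhost` are hypothetical. The parser assumes the output starts with a header row of column names followed by whitespace-separated rows, which may not hold for every Grid Engine version or option set.

```python
import subprocess


def parse_qhost(text):
    """Turn qhost-style tabular text into a list of dicts keyed by the header row.

    Assumption: the first line holds the column names, and each host row has
    one whitespace-separated field per column.
    """
    lines = text.splitlines()
    if not lines:
        return []
    header = lines[0].split()
    rows = []
    for line in lines[1:]:
        # Skip blank lines and the dashed separator line under the header.
        if not line.strip() or line.startswith("-"):
            continue
        fields = line.split()
        if len(fields) != len(header):
            continue  # row does not match the header layout; ignore it
        rows.append(dict(zip(header, fields)))
    return rows


def run_qhost():
    """Run qhost with no options and return the parsed rows."""
    result = subprocess.run(["qhost"], capture_output=True, text=True, check=True)
    return parse_qhost(result.stdout)
```

Calling `run_qhost()` on a login node should return one dict per row of the table. All values are kept as strings, so numeric columns need converting before comparison.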

Login nodes

- Dell PowerEdge R720 server - Intel® Xeon® E5-2665 Sandy Bridge CPUs - 16 cores - 64 GB RAM

- Dell PowerEdge R715 server - AMD Opteron™ 6378 Piledriver CPUs - 32 cores - 128 GB RAM

Compute nodes

- 17 Dell PowerEdge C6220 chassis containing 4 servers/chassis

- Intel® Xeon® E5-2670 Sandy Bridge CPUs - 16 cores/server (2 socket) - 64 GB RAM/server

- /proc/cpuinfo flags:

fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi

mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon

pebs bts rep_good xtopology nonstop_tsc aperfmperf pni pclmulqdq dtes64 monitor ds_cpl

vmx smx est tm2 ssse3 cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic popcnt

tsc_deadline_timer aes xsave avx lahf_lm ida arat epb xsaveopt pln pts dts tpr_shadow

vnmi flexpriority ept vpid

- 3 Dell PowerEdge C6220 chassis containing 4 servers/chassis

- Intel® Xeon® E5-2665 Sandy Bridge CPUs - 16 cores/server (2 socket) - 64 GB RAM/server

- /proc/cpuinfo flags:

fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi

mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon

pebs bts rep_good xtopology nonstop_tsc aperfmperf pni pclmulqdq dtes64 monitor ds_cpl

vmx smx est tm2 ssse3 cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic popcnt

tsc_deadline_timer aes xsave avx lahf_lm ida arat epb xsaveopt pln pts dts tpr_shadow

vnmi flexpriority ept vpid

- 1 Dell PowerEdge C6220 II chassis containing 4 servers

- Intel® Xeon® E5-2650 v2 Ivy Bridge CPUs - 16 cores/server (2 socket) - 64 GB RAM/server

- /proc/cpuinfo flags:

fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi

mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon

pebs bts rep_good xtopology nonstop_tsc aperfmperf pni pclmulqdq dtes64 monitor ds_cpl

vmx smx est tm2 ssse3 cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic popcnt

tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm ida arat epb xsaveopt pln pts

dts tpr_shadow vnmi flexpriority ept vpid fsgsbase smep erms

- 1 Dell PowerEdge C6320 chassis containing 4 servers

- Intel® Xeon® E5-2650 v3 Haswell CPUs - 20 cores/server (2 socket) - 128 GB RAM/server

- /proc/cpuinfo flags:

fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts

acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc

arch_perfmon pebs bts rep_good xtopology nonstop_tsc aperfmperf pni pclmulqdq dtes64

monitor ds_cpl vmx smx est tm2 ssse3 fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic

movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm ida arat epb

xsaveopt pln pts dts tpr_shadow vnmi flexpriority ept vpid fsgsbase bmi1 avx2 smep

bmi2 erms invpcid

- 1 Dell PowerEdge C6420 chassis containing 4 servers

- Intel® Xeon® Gold 6148 Skylake CPUs - 40 cores/server (2 socket) - 192 GB RAM/server

- /proc/cpuinfo flags:

fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts

acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc

arch_perfmon pebs bts rep_good xtopology nonstop_tsc aperfmperf pni pclmulqdq dtes64

monitor ds_cpl vmx smx est tm2 ssse3 fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic

movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch

ida arat epb xsaveopt pln pts dtherm tpr_shadow vnmi flexpriority ept vpid fsgsbase

bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm avx512f rdseed adx avx512cd

cqm_llc cqm_occup_llc

- 9 Dell PowerEdge C6145 chassis containing 2 servers/chassis

- AMD Opteron™ 6378 Piledriver CPUs - 64 cores/server (4 socket) - 256 GB RAM/server

- /proc/cpuinfo flags:

fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr

sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good

nonstop_tsc extd_apicid amd_dcm aperfmperf pni pclmulqdq monitor ssse3 fma cx16

sse4_1 sse4_2 popcnt aes xsave avx f16c lahf_lm cmp_legacy svm extapic cr8_legacy abm

sse4a misalignsse 3dnowprefetch osvw ibs xop skinit wdt lwp fma4 tce nodeid_msr tbm

topoext perfctr_core cpb npt lbrv svm_lock nrip_save tsc_scale vmcb_clean flushbyasid

decodeassists pausefilter pfthreshold bmi1

The cpuinfo flags/features may be used to determine compilation flags. See Compiling with GCC.
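One way to act on this is to read the flags line on a node and pick the widest vector extension it advertises. The sketch below is illustrative only: the helper names and the shortlist of flags are assumptions, and the mapping covers just a few common GCC x86 options (`-msse4.2`, `-mavx`, `-mavx2`, `-mavx512f`).

```python
def cpu_flags(cpuinfo_text):
    """Return the set of flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


# Ordered from widest to narrowest vector extension.
# Each pair is (cpuinfo flag, corresponding GCC option).
SIMD_LEVELS = [
    ("avx512f", "-mavx512f"),
    ("avx2", "-mavx2"),
    ("avx", "-mavx"),
    ("sse4_2", "-msse4.2"),
]


def best_simd_option(flags):
    """Return the GCC option for the widest extension present, or None."""
    for flag, option in SIMD_LEVELS:
        if flag in flags:
            return option
    return None


def flags_from_file(path="/proc/cpuinfo"):
    """Read the flags of the node this script runs on (Linux only)."""
    with open(path) as handle:
        return cpu_flags(handle.read())
```

For example, `best_simd_option(flags_from_file())` run on a node whose flags include `avx` but not `avx2` would return `-mavx`. Because the node types listed above differ in their flags, a binary built with the widest option for one type may not run on another.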

GPU Compute Nodes

- 8 Dell PowerEdge R720 servers

- Intel® Xeon® E5-2650 v2 Ivy Bridge CPUs (https://ark.intel.com/products/75269/Intel-Xeon-Processor-E5-2650-v2-20M-Cache-2-60-GHz) - 16 cores/server (2 socket) - 64 GB RAM/server

- /proc/cpuinfo flags:

fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi

mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon

pebs bts rep_good xtopology nonstop_tsc aperfmperf pni pclmulqdq dtes64 ds_cpl vmx smx

est tm2 ssse3 cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic popcnt tsc_deadline_timer aes

xsave avx f16c rdrand lahf_lm arat xsaveopt pln pts dtherm tpr_shadow vnmi flexpriority

ept vpid fsgsbase smep erms

- Each node holds 2 NVIDIA Tesla K20Xm GPU devices (Kepler architecture)[1] - each device has a single Kepler GK110 GPU, 2688 CUDA cores, and 6 GB GDDR5 memory

Storage

- High performance shared scratch: Dell/Intel Enterprise Edition for Lustre utilizing Lustre Parallel File System - 120 TB raw (88 TB usable) capacity over QDR InfiniBand, providing aggregate 6 GB/s read and aggregate 3.5 GB/s write performance

- Persistent storage: Dell HPC NFS Storage Solution - 180 TB raw capacity, 160 TB usable capacity

Backup Storage

- 585 TiB raw capacity, 464 TiB usable capacity

- 10 Gigabit Ethernet connectivity

Network

Network fabric: 4X quad data rate (QDR) InfiniBand (Mellanox)

Total of 2432 compute cores, 4 GB RAM per core
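The core total can be cross-checked against the node counts listed under Hardware. In the sketch below, the group labels and the `totals` helper are made up for illustration; the counts are taken from the lists above. Summing only the C6220 (E5-2670 and E5-2665) and C6145 groups reproduces the 2432-core figure at exactly 4 GB RAM per core. The other listed groups are kept separate, since the stated total does not appear to include them.

```python
# (chassis or servers, servers per chassis, cores per server, GB RAM per server)
# Counts are copied from the hardware lists above; labels are illustrative.
MATCHING_GROUPS = {
    "C6220 / E5-2670": (17, 4, 16, 64),
    "C6220 / E5-2665": (3, 4, 16, 64),
    "C6145 / Opteron 6378": (9, 2, 64, 256),
}

OTHER_GROUPS = {
    "C6220 II / E5-2650 v2": (1, 4, 16, 64),
    "C6320 / E5-2650 v3": (1, 4, 20, 128),
    "C6420 / Gold 6148": (1, 4, 40, 192),
    "R720 GPU / E5-2650 v2": (8, 1, 16, 64),
}


def totals(groups):
    """Return (total cores, total GB RAM) for a dict of node groups."""
    cores = sum(n * per * c for n, per, c, _ in groups.values())
    ram_gb = sum(n * per * r for n, per, _, r in groups.values())
    return cores, ram_gb


cores, ram_gb = totals(MATCHING_GROUPS)
print(cores, ram_gb, ram_gb / cores)  # 2432 9728 4.0

all_cores, all_ram_gb = totals({**MATCHING_GROUPS, **OTHER_GROUPS})
print(all_cores, all_ram_gb)  # 2864 11776
```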

Software

- Operating system: Red Hat Enterprise Linux 6 64-bit

- Job scheduler: Univa Grid Engine

- Compilers: Intel Composer XE, AMD x86 Open64 Compiler Suite, and GNU Compiler Collection

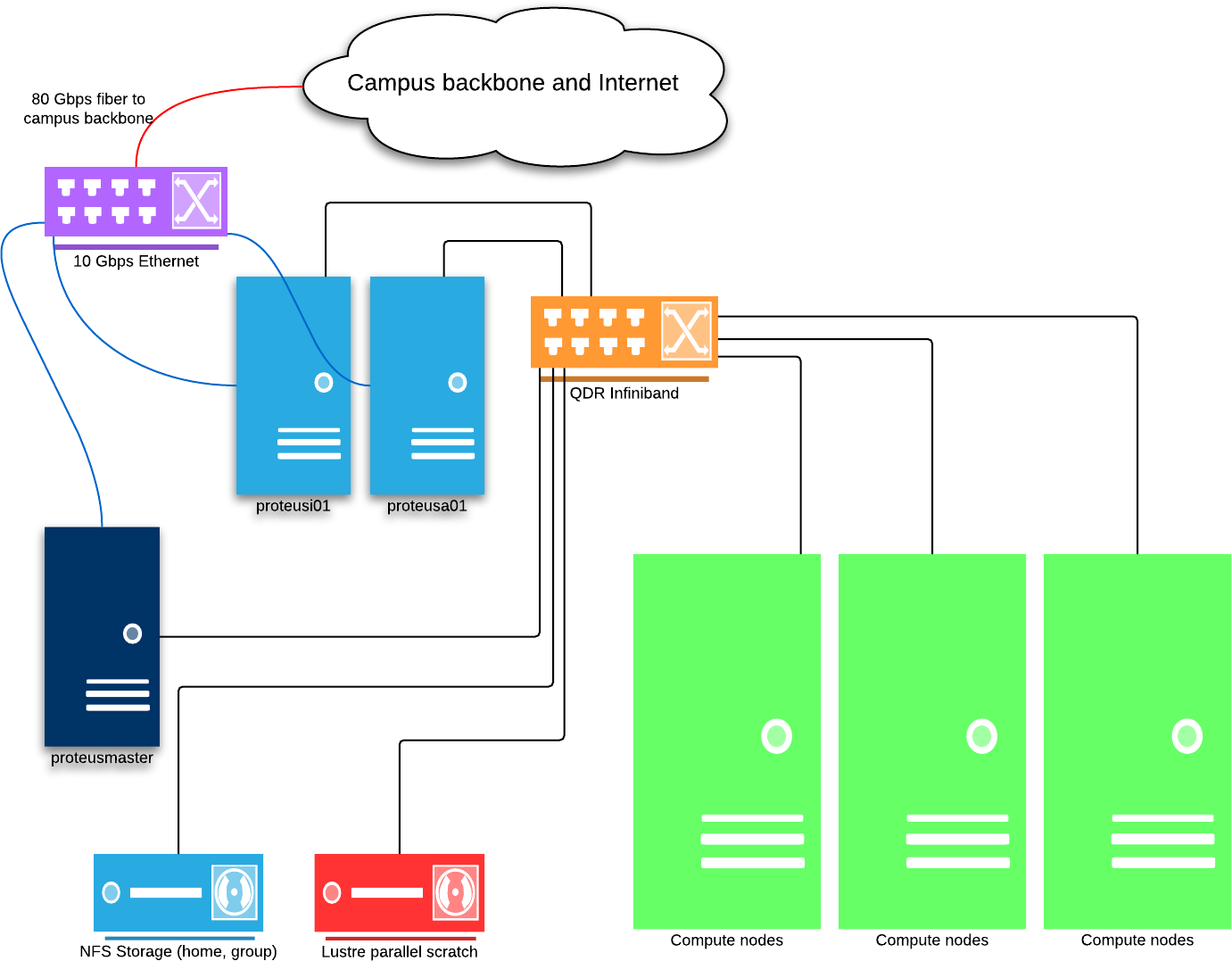

Schematic

This is a simplified schematic of Proteus. Two elements are not shown: the gigabit Ethernet management network and the internal structure of the two storage devices.

ERRATUM: the server room switch is 100 (hundred) Gbps Ethernet, not 10 Gbps as shown.