Picotte Hardware and Software

Picotte is the new URCF HPC cluster.

Nodes

Management node - picottemgmt

- Model: Dell PowerEdge R640

- CPU: 2x Intel® Xeon® Platinum 8268 2.90 GHz 24-core 35.75 MB cache

- RAM: 384 GiB

- Storage:

  - 2x 960 GB SSD 6Gbps SATA

  - 2x 2 TB HDD 7200 rpm 6Gbps SATA

Login node - picottelogin

- Model: Dell PowerEdge R640

- CPU: 2x Intel® Xeon® Platinum 8268 2.90 GHz 24-core 35.75 MB cache

- RAM: 384 GiB

- Storage: 960 GB SSD 12Gbps SAS

Standard compute nodes (74 nodes)

- Model: Dell PowerEdge R640

- CPU: 2x Intel® Xeon® Platinum 8268 2.90 GHz 24-core 35.75 MB cache

- RAM: 192 GiB

- Storage: 960 GB SSD 12Gbps SAS

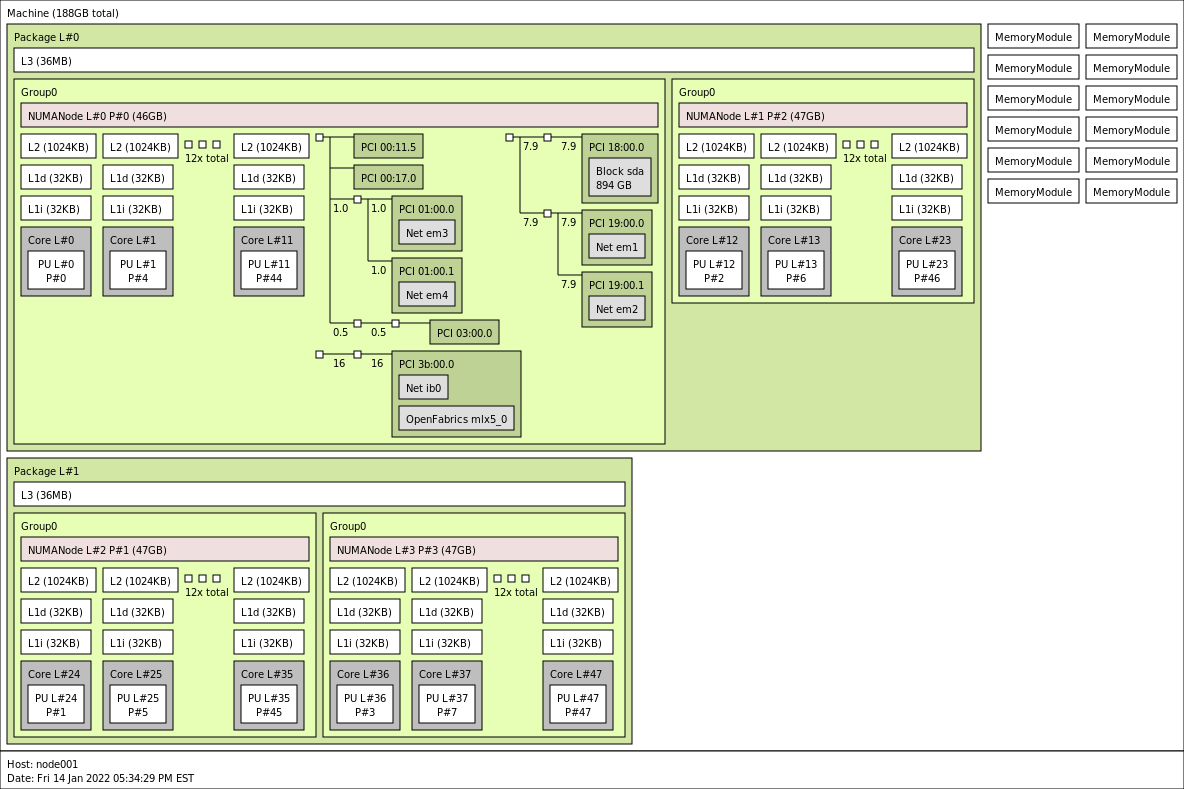

- Hardware topology:

Big memory compute nodes (2 nodes)

- Model: Dell PowerEdge R640

- CPU: 2x Intel® Xeon® Platinum 8268 2.90 GHz 24-core 35.75 MB cache

- RAM: 1536 GiB

- Storage: 960 GB SSD 12Gbps SAS

GPU compute nodes (12 nodes)

- Model: Dell PowerEdge C4140

- CPU: 2x Intel® Xeon® Platinum 8260 2.40 GHz 24-core 35.75 MB cache

- GPU: 4x NVIDIA Tesla V100-SXM2 (NVLink) 32GB (Volta)

- RAM: 192 GiB

- Storage: 2x 960 GB SSD 6Gbps SATA

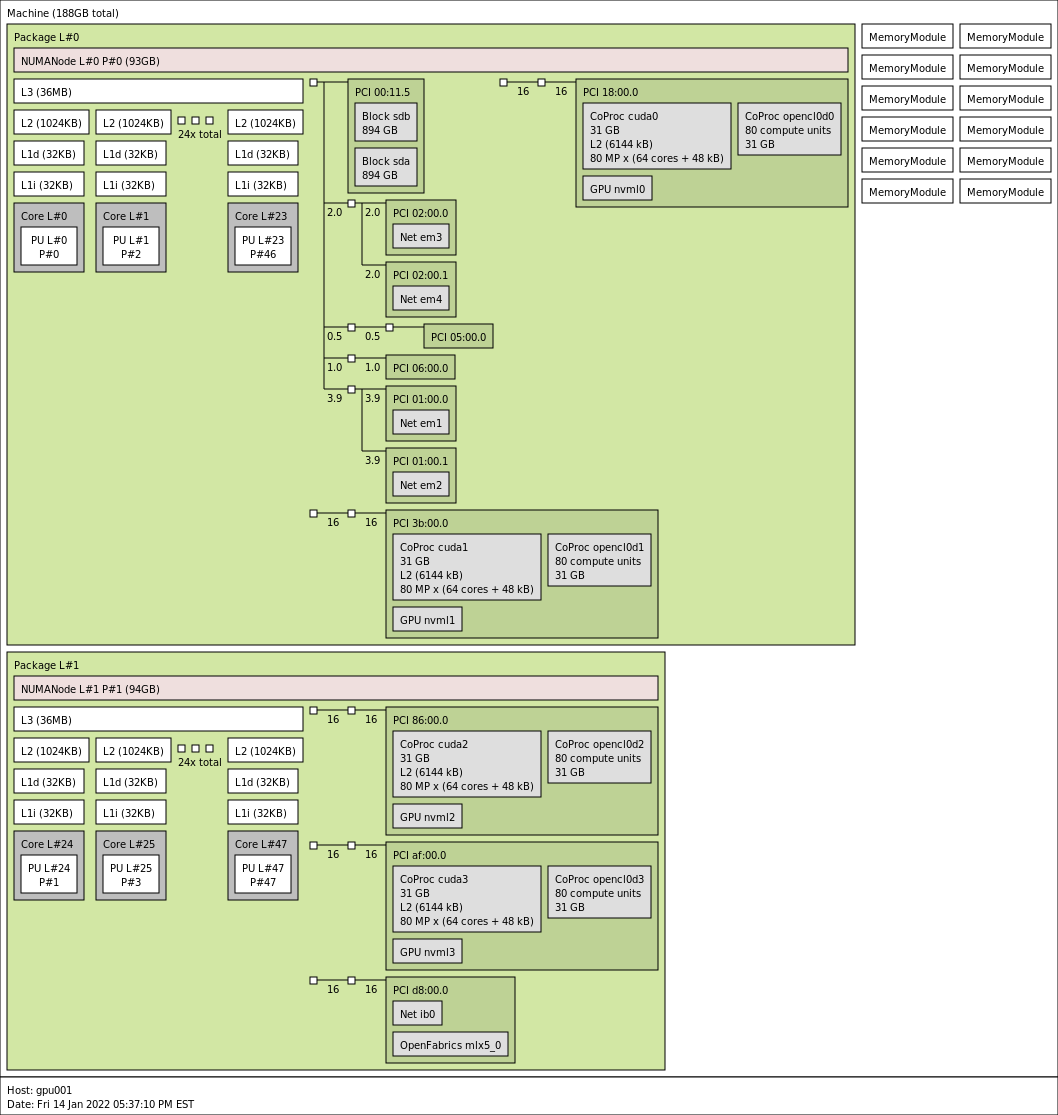

- Hardware topology:

Storage

Parallel Scratch Storage

- BeeGFS on 4 Dell servers

- Total usable volume: 175 TB

- Connected to cluster via 100 Gbps HDR InfiniBand

Persistent Storage

- Isilon scale-out storage

- Total usable volume: 649 TB

- 7.2 TB SSD caching

- Connected to cluster using NFS via 6x 10 Gbps Ethernet

- Connected to campus using SMB (Windows file sharing) via 2x 10 Gbps Ethernet

Local Scratch Storage

- 960 GB or 1920 GB SSD

Network Fabrics

High Performance Cluster Network

- Mellanox HDR InfiniBand @ 100 Gbps, latency < 0.2 μs

General Purpose Cluster Network

- 10 Gbps Ethernet

Software

- Operating system: Red Hat Enterprise Linux 8

- Job scheduler: Slurm

- Development tools: GCC 9.2, Intel compiler suite 2020 + MKL, CUDA 11.x

Theoretical Peak Performance

The theoretical peak performance is the sum of the theoretical peak performance of all individual processors and GPU devices. It ignores everything that limits actual computation, such as memory bandwidth, communication, and load imbalance. The login and management nodes are excluded. Performance is measured in floating-point operations per second (FLOPS).
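As a worked formula (a sketch; the symbols are introduced here for illustration and are not taken from Intel's or NVIDIA's documentation), the system peak is a weighted sum over device types, and a CPU's peak is cores times clock times floating-point operations per cycle:

$$
R_{\text{peak}} = \sum_{k} N_k \, R_k ,
\qquad
R_{\text{CPU}} = n_{\text{cores}} \cdot f \cdot F
$$

Here $N_k$ is the number of sockets or devices of type $k$, $R_k$ the per-device peak, $n_{\text{cores}}$ the cores per socket, $f$ the clock frequency used for the rating, and $F$ the floating-point operations per cycle per core. Assuming $F = 32$ double-precision operations per cycle (two AVX-512 FMA units, each doing a multiply and an add on 8 doubles), the 1459.2 GFLOPS listed for the 24-core Platinum 8268 implies $f = 1459.2 / (24 \cdot 32) = 1.9$ GHz, below its 2.90 GHz base clock, presumably because these processors run AVX-512 code at a reduced frequency.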

Theoretical performance of individual CPUs or GPUs:

- Standard and big memory nodes ("def" and "bm" partitions) - Intel Xeon Platinum 8268[1]: 1459.2 GFLOPS (152 sockets total)

- GPU node CPUs ("gpu" partition) - Intel Xeon Platinum 8260[2]: 1152.0 GFLOPS (24 sockets total)

- GPU devices ("gpu" partition) - NVIDIA Tesla V100 for NVLink[3]: 15,700 GFLOPS single precision (48 devices total)

Total theoretical peak performance: 1.0 PFLOPS (1,003,046.4 GFLOPS)
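The total can be reproduced with a short sketch. It assumes the device counts follow from the node list (2 CPU sockets per node, 4 GPUs per GPU node; standard and big memory nodes both use the Platinum 8268, GPU nodes the Platinum 8260) and simply sums count times per-device peak as listed; `DEVICES` and `total_peak_gflops` are illustrative names.

```python
# Per-device theoretical peak (GFLOPS) and device count, as listed on this page.
# Counts are derived from the node list: 2 CPU sockets per node, 4 GPUs per GPU node.
DEVICES = {
    # Standard (74 nodes) and big memory (2 nodes) both use the Platinum 8268.
    "Intel Xeon Platinum 8268": (1459.2, (74 + 2) * 2),  # 152 sockets
    # The 12 GPU nodes use the Platinum 8260.
    "Intel Xeon Platinum 8260": (1152.0, 12 * 2),  # 24 sockets
    # 4 V100 devices in each of the 12 GPU nodes.
    "NVIDIA Tesla V100-SXM2": (15700.0, 12 * 4),  # 48 devices
}


def total_peak_gflops(devices):
    """Return the sum of per-device peak * device count over all device types."""
    return sum(peak * count for peak, count in devices.values())


total = total_peak_gflops(DEVICES)
for name, (peak, count) in DEVICES.items():
    print(f"{name}: {count} x {peak:,.1f} = {peak * count:,.1f} GFLOPS")
print(f"Total: {total:,.1f} GFLOPS ({total / 1e6:.2f} PFLOPS)")
```

Writing the counts as products of node counts makes it easy to see where each socket or device total comes from.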

Benchmark Results

Benchmarked by Dell using HPL (High-Performance Linpack), and CUDA-enabled HPL for the GPU nodes:

- all standard nodes: 145.88 TFLOPS

- all big memory nodes: 5.54 TFLOPS

- all GPU nodes: 251.00 TFLOPS
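Comparing a measured HPL result (Rmax) with the theoretical peak (Rpeak) gives the HPL efficiency. The sketch below does this for the two CPU-only groups. It assumes Rpeak for each group is its node count times 2 sockets times the 1459.2 GFLOPS listed for the Platinum 8268, which the node list gives as the CPU of both standard and big memory nodes. The GPU nodes are left out because HPL measures double-precision performance, while the 15,700 GFLOPS V100 figure above is NVIDIA's single-precision peak.

```python
# Per-socket theoretical peak of the Xeon Platinum 8268 (GFLOPS), from the list above.
XEON_8268_GFLOPS = 1459.2

# Assumed Rpeak per node group: nodes * 2 sockets * per-socket peak (GFLOPS).
rpeak = {
    "standard nodes": 74 * 2 * XEON_8268_GFLOPS,
    "big memory nodes": 2 * 2 * XEON_8268_GFLOPS,
}

# Measured HPL results (Rmax) from the benchmark list, converted from TFLOPS to GFLOPS.
rmax = {
    "standard nodes": 145.88e3,
    "big memory nodes": 5.54e3,
}

for group, peak in rpeak.items():
    efficiency = rmax[group] / peak
    print(
        f"{group}: Rmax {rmax[group] / 1e3:.2f} TFLOPS / "
        f"Rpeak {peak / 1e3:.2f} TFLOPS = {efficiency:.1%}"
    )
```

With these numbers, the standard nodes reach roughly two thirds of their theoretical peak and the big memory nodes about 95%.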

References

[1] Export Compliance Metrics for Intel Xeon Processors (PDF)

[2]