IO Tests

This is a series of non-rigorous benchmarks comparing write I/O to Lustre, NFS, and local disk. They were run during normal operation, i.e. while other jobs were running on Proteus, so the numbers may reflect some contention from that other work.

25 tasks ran concurrently, each writing 20 GiB of data in 2 MiB blocks. Each task had exclusive use of one node, i.e. it shared the node neither with other tasks nor with other users' jobs.

The Lustre tests wrote to both striped directories (12 stripes) and unstriped directories. See Lustre Scratch Filesystem#File Striping.
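The page does not show how the two test directories were prepared. A minimal sketch, assuming the standard Lustre `lfs` client tool is available and that "unstriped" means a stripe count of 1, might look like the following. The helper name `set_stripe_count` is hypothetical.

```shell
# Hypothetical helper, not taken from the original page.
# Usage: set_stripe_count DIR COUNT
set_stripe_count() {
    if ! command -v lfs >/dev/null 2>&1; then
        echo "lfs not found; leaving $1 unchanged" >&2
        return 0
    fi
    mkdir -p "$1" || return 1
    # -c sets the number of OSTs that new files in DIR are striped across.
    lfs setstripe -c "$2" "$1" || return 1
    # -d prints the directory's own default layout rather than its contents.
    lfs getstripe -d "$1"
}
```

With this helper, the striped directory would be set up as `set_stripe_count /lustre/scratch/dwc62/iotest_striped 12` and the unstriped one as `set_stripe_count /lustre/scratch/dwc62/iotest_unstriped 1`. Files created afterwards inherit the directory's default layout.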

Job Script

This is the job script that runs the test.

NB: the actual runs were later changed so that each storage type is tested in a separate job.
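The per-storage-type variant is not shown on this page. One way it could be structured is to factor the repeated write/list/remove steps into a function and call it once per job with the target directory. This is only a sketch under that assumption: the function name `write_test` and its arguments are hypothetical, and it times each write with `date` instead of the shell's `time` keyword so that it also runs under a plain POSIX shell.

```shell
# Sketch only; not the script that was actually run on Proteus.
# Usage: write_test DIR LABEL [BS] [COUNT]
write_test() {
    dir=$1
    label=$2
    bs=${3:-2M}          # block size, default as in the job script
    count=${4:-20480}    # number of blocks, default as in the job script
    outputfile="${dir}/ddtest.${JOB_ID:-nojob}.${SGE_TASK_ID:-0}"
    # First pass writes zeroes, second pass writes random data.
    for src in /dev/zero /dev/urandom; do
        echo "Writing ${count} x ${bs} from ${src} to ${label} - task ID ${SGE_TASK_ID:-0} ..."
        start=$(date +%s)
        dd bs="${bs}" count="${count}" if="${src}" of="${outputfile}" || return 1
        end=$(date +%s)
        echo "elapsed: $(( end - start )) s"
        /bin/ls -l "${outputfile}"
        /bin/rm -f "${outputfile}"
    done
}
```

A job testing only striped Lustre would then end with a single call such as `write_test "${STRIPED}" "striped Lustre"`, and the `sleep 5m` pauses between storage types would no longer be needed.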

#!/bin/bash

#$ -S /bin/bash

#$ -P urcfadmPrj

#$ -cwd

#$ -j y

#$ -l h_rt=6:00:00

#$ -t 1:25

#$ -l exclusive

#$ -l vendor=intel

#$ -q all.q

. /etc/profile.d/modules.sh

module load shared

module load proteus

module load gcc

module load sge/univa

STRIPED=/lustre/scratch/dwc62/iotest_striped

UNSTRIPED=/lustre/scratch/dwc62/iotest_unstriped

### total data per pass = 2 MiB * 20480 blocks * 25 tasks = 1000 GiB (40 GiB per task)

bs="2M"

count="20480"

outputfile=${STRIPED}/ddtest.${JOB_ID}.${SGE_TASK_ID}

echo "Writing 40 GiB of zeroes to striped Lustre - task ID ${SGE_TASK_ID} ..."

time dd bs=${bs} count=${count} if=/dev/zero of=${outputfile}

/bin/ls -l ${outputfile}

/bin/rm -f ${outputfile}

echo "Writing 40 GiB of random data to striped Lustre - task ID ${SGE_TASK_ID} ..."

time dd bs=${bs} count=${count} if=/dev/urandom of=${outputfile}

/bin/ls -l ${outputfile}

/bin/rm -f ${outputfile}

sleep 5m

outputfile=${UNSTRIPED}/ddtest.${JOB_ID}.${SGE_TASK_ID}

echo "Writing 40 GiB of zeroes to unstriped Lustre - task ID ${SGE_TASK_ID} ..."

time dd bs=${bs} count=${count} if=/dev/zero of=${outputfile}

/bin/ls -l ${outputfile}

/bin/rm -f ${outputfile}

echo "Writing 40 GiB of random data to unstriped Lustre - task ID ${SGE_TASK_ID} ..."

time dd bs=${bs} count=${count} if=/dev/urandom of=${outputfile}

/bin/ls -l ${outputfile}

/bin/rm -f ${outputfile}

sleep 5m

outputfile=~/Tmp/iotest/ddtest.${JOB_ID}.${SGE_TASK_ID}

echo "Writing 40 GiB of zeroes to NFS - task ID ${SGE_TASK_ID} ..."

time dd bs=${bs} count=${count} if=/dev/zero of=${outputfile}

/bin/ls -l ${outputfile}

/bin/rm -f ${outputfile}

echo "Writing 40 GiB of random data to NFS - task ID ${SGE_TASK_ID} ..."

time dd bs=${bs} count=${count} if=/dev/urandom of=${outputfile}

/bin/ls -l ${outputfile}

/bin/rm -f ${outputfile}

sleep 5m

outputfile=${TMP}/ddtest.${JOB_ID}.${SGE_TASK_ID}

echo "Writing 40 GiB of zeroes to local scratch - task ID ${SGE_TASK_ID} ..."

time dd bs=${bs} count=${count} if=/dev/zero of=${outputfile}

/bin/ls -l ${outputfile}

/bin/rm -f ${outputfile}

echo "Writing 40 GiB of random data to local scratch - task ID ${SGE_TASK_ID} ..."

time dd bs=${bs} count=${count} if=/dev/urandom of=${outputfile}

/bin/ls -l ${outputfile}

/bin/rm -f ${outputfile}
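As a check on the sizes, the `bs` and `count` values in the script as listed can be multiplied out with shell arithmetic. The variable names below are illustrative; only the three numbers come from the script.

```shell
# Values copied from the job script: bs=2M, count=20480, array of 25 tasks.
bs_mib=2
count=20480
tasks=25

# Data written by one dd invocation, in GiB (1 GiB = 1024 MiB).
per_task_gib=$(( bs_mib * count / 1024 ))

# Data written by all tasks in one pass (e.g. zeroes to striped Lustre).
total_gib=$(( per_task_gib * tasks ))

echo "per task: ${per_task_gib} GiB, all tasks: ${total_gib} GiB"
```

For the script as listed this gives 40 GiB per `dd` invocation and 1000 GiB per pass across the 25 tasks. Halving `count` to 10240 would give 20 GiB per task.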

Results

Writing Zeros

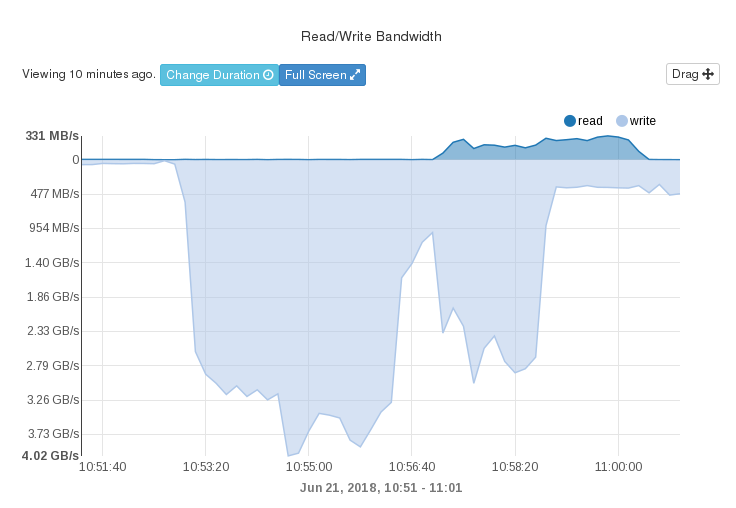

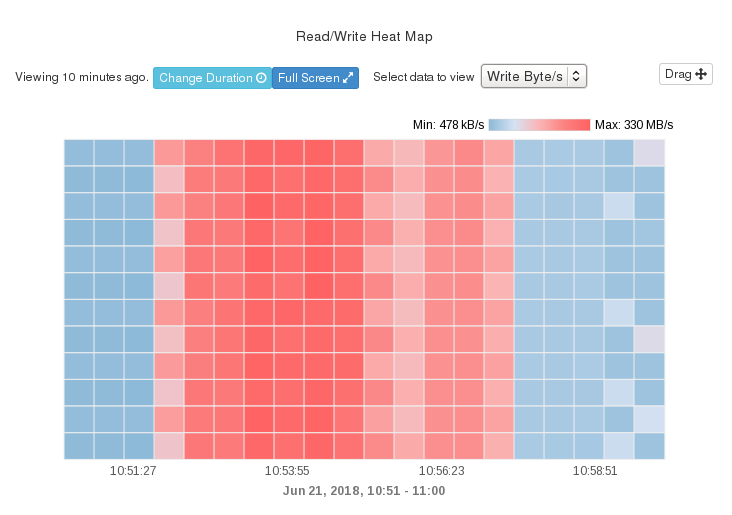

Lustre striped

Write bandwidth up to about 4 GB/s.

The heat map shows activity on each of the 12 object storage targets (OSTs), i.e. the storage devices that hold file data, as opposed to metadata.
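To put the aggregate figure in per-task terms, the arithmetic below assumes, purely for illustration, that all 25 tasks were writing at once and shared the roughly 4 GB/s evenly, with GB meaning 10^9 bytes. It uses the block size and count from the job script as listed. These are back-of-the-envelope bounds, not measurements.

```shell
# Illustrative assumptions: ~4 GB/s aggregate, shared evenly by 25 tasks.
aggregate_mb_s=4000
tasks=25
per_task_mb_s=$(( aggregate_mb_s / tasks ))

# Bytes written in one pass by the script as listed: 25 tasks * 20480 blocks * 2 MiB.
total_bytes=$(( tasks * 20480 * 2 * 1024 * 1024 ))

# Shortest possible duration of one pass if the aggregate rate were sustained.
min_seconds=$(( total_bytes / (aggregate_mb_s * 1000000) ))

echo "per-task share: ${per_task_mb_s} MB/s, one pass takes at least ${min_seconds} s"
```

Under these assumptions each task would see about 160 MB/s, and one full pass could not finish in much under four and a half minutes.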

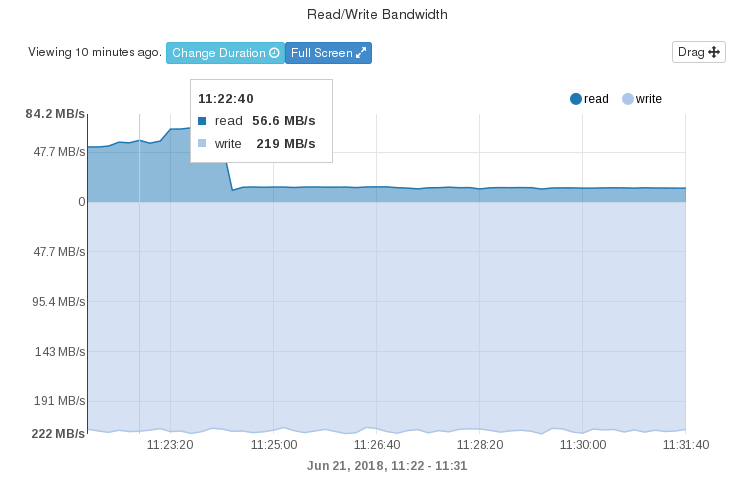

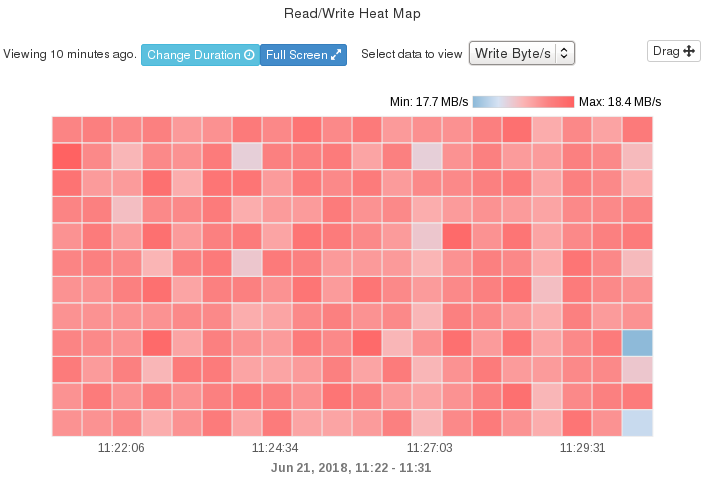

Lustre not striped

NFS

Local disk

Writing Random Data

Lustre striped